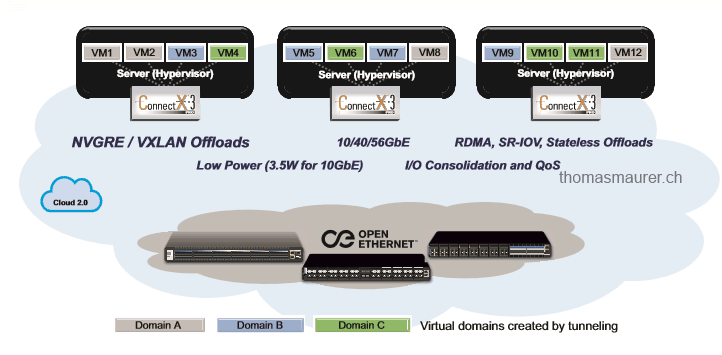

At the moment I spend a lot of time working with Hyper-V Network Virtualization, System Center Virtual Machine Manager and the new Network Virtualization Gateway. I am also creating some architecture design references for hosting providers that are going to use Hyper-V Network Virtualization with SMB as storage. If you are going for any kind of network virtualization (Hyper-V Network Virtualization, which uses NVGRE, or VXLAN), you want to make sure you can offload the encapsulated traffic to the network adapter.

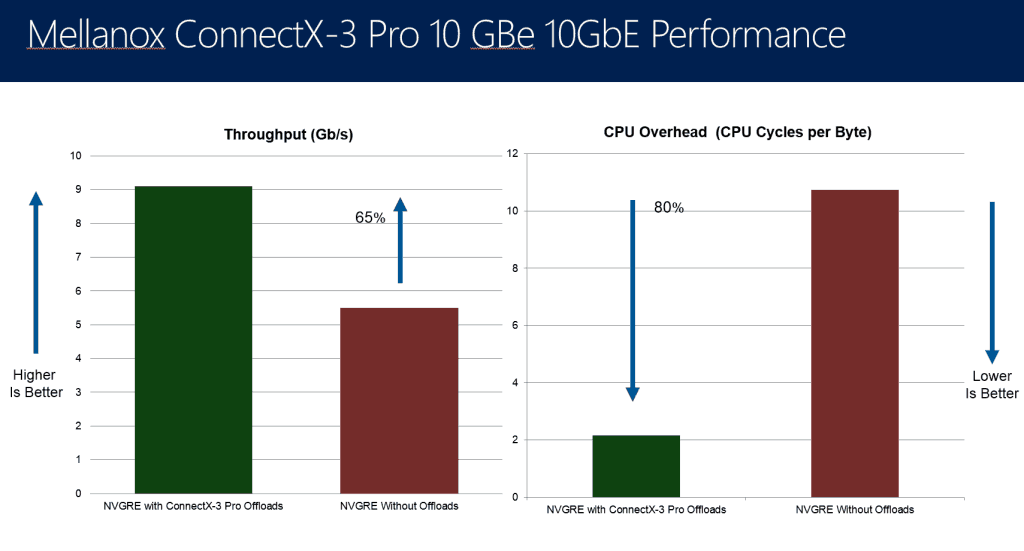

The great news here is that the Mellanox ConnectX-3 Pro not only offers RDMA (RoCE), which is used for SMB Direct, but also hardware offloads for NVGRE and VXLAN encapsulated traffic. This should improve the performance of Network Virtualization dramatically.

More information on the Mellanox ConnectX-3 Pro:

ConnectX-3 Pro 10/40/56GbE adapter cards with hardware offload engines to Overlay Networks (“Tunneling”), provide the highest performing and most flexible interconnect solution for PCI Express Gen3 servers used in public and private clouds, enterprise data centers, and high performance computing.

Public and private cloud clustered databases, parallel processing, transactional services, and high-performance embedded I/O applications will achieve significant performance improvements resulting in reduced completion time and lower cost per operation.

Benefits:

- 10/40/56Gb/s connectivity for servers and storage

- World-class cluster, network, and storage performance

- Cutting edge performance in virtualized overlay networks (VXLAN and NVGRE)

- Guaranteed bandwidth and low-latency services

- I/O consolidation

- Virtualization acceleration

- Power efficient

- Scales to tens-of-thousands of nodes

Key features:

- 1us MPI ping latency

- Up to 40/56GbE per port

- Single- and Dual-Port options available

- PCI Express 3.0 (up to 8GT/s)

- CPU offload of transport operations

- Application offload

- Precision Clock Synchronization

- HW Offloads for NVGRE and VXLAN encapsulated traffic

- End-to-end QoS and congestion control

- Hardware-based I/O virtualization

- RoHS-R6
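To put the PCI Express 3.0 figure from the feature list next to the port speeds, here is a rough back-of-the-envelope calculation in Python. It assumes an x8 link, which the list above does not state, and it only accounts for the 128b/130b line encoding of PCIe 3.0, not for packet (TLP) overhead, so real throughput will be somewhat lower. The function name and constants are illustrative only.

```python
# Rough PCIe 3.0 bandwidth estimate (a sketch, not vendor data).
PCIE3_GT_PER_LANE = 8.0          # 8 GT/s per lane, as listed in the key features
PCIE3_ENCODING = 128.0 / 130.0   # 128b/130b line encoding used by PCIe 3.0


def pcie3_raw_gbps(lanes: int) -> float:
    """Return the per-direction bandwidth in Gb/s after line encoding."""
    if lanes <= 0:
        raise ValueError("lanes must be positive")
    return PCIE3_GT_PER_LANE * PCIE3_ENCODING * lanes


# ASSUMPTION: an x8 slot; the source only says "PCI Express 3.0 (up to 8GT/s)".
ASSUMED_LANES = 8
slot_gbps = pcie3_raw_gbps(ASSUMED_LANES)

for label, port_gbps in [("1 x 40GbE", 40), ("1 x 56GbE", 56), ("2 x 40GbE", 80)]:
    verdict = "fits within" if port_gbps <= slot_gbps else "exceeds"
    print(f"{label}: {port_gbps} Gb/s {verdict} the ~{slot_gbps:.1f} Gb/s slot")
```

Under that assumption a single port at either speed fits within the slot, while two 40GbE ports running at line rate at the same time would be limited by the PCIe link rather than by the network.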

Virtualized Overlay Networks — Infrastructure as a Service (IaaS) cloud demands that data centers host and serve multiple tenants, each with their own isolated network domain over a shared network infrastructure. To achieve maximum efficiency, data center operators are creating overlay networks that carry traffic from individual Virtual Machines (VMs) in encapsulated formats such as NVGRE and VXLAN over a logical “tunnel,” thereby decoupling the workload’s location from its network address. Overlay Network architecture introduces an additional layer of packet processing at the hypervisor level, adding and removing protocol headers for the encapsulated traffic. The new encapsulation prevents many of the traditional “offloading” capabilities (e.g. checksum, TSO) from being performed at the NIC. ConnectX-3 Pro effectively addresses the increasing demand for an overlay network, enabling superior performance by introducing advanced NVGRE and VXLAN hardware offload engines that enable the traditional offloads to be performed on the encapsulated traffic. With ConnectX-3 Pro, data center operators can decouple the overlay network layer from the physical NIC performance, thus achieving native performance in the new network architecture.
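To make the "adding and removing protocol headers" part more concrete, here is a minimal Python sketch of the two tunnel headers as I understand them from the public specifications (RFC 7637 for NVGRE, RFC 7348 for VXLAN). It is not how Hyper-V or the adapter implements encapsulation; the function names are made up for illustration, and the overhead figures assume an untagged outer Ethernet header and an outer IPv4 header without options.

```python
import struct
from typing import Tuple

GRE_FLAGS_KEY_PRESENT = 0x2000   # GRE "K" bit set, version 0
GRE_PROTO_TEB = 0x6558           # Transparent Ethernet Bridging (inner Ethernet frame)
VXLAN_FLAG_VNI_VALID = 0x08      # VXLAN "I" flag

OUTER_ETHERNET = 14              # assumption: no VLAN tag on the outer frame
OUTER_IPV4 = 20                  # assumption: IPv4 without options
OUTER_UDP = 8                    # VXLAN rides on UDP, NVGRE does not


def nvgre_header(vsid: int, flow_id: int = 0) -> bytes:
    """Build the 8-byte GRE header NVGRE uses: 24-bit VSID plus 8-bit FlowID in the key."""
    if not 0 <= vsid < 2 ** 24:
        raise ValueError("VSID must fit in 24 bits")
    if not 0 <= flow_id < 2 ** 8:
        raise ValueError("FlowID must fit in 8 bits")
    key = (vsid << 8) | flow_id
    return struct.pack("!HHI", GRE_FLAGS_KEY_PRESENT, GRE_PROTO_TEB, key)


def parse_nvgre_header(header: bytes) -> Tuple[int, int]:
    """Return (vsid, flow_id) from an 8-byte NVGRE header."""
    flags, proto, key = struct.unpack("!HHI", header)
    if not flags & GRE_FLAGS_KEY_PRESENT or proto != GRE_PROTO_TEB:
        raise ValueError("not an NVGRE header")
    return key >> 8, key & 0xFF


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags, reserved bits, 24-bit VNI, reserved byte."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


# Bytes added in front of every tenant frame under the assumptions above.
NVGRE_OVERHEAD = OUTER_ETHERNET + OUTER_IPV4 + len(nvgre_header(0))
VXLAN_OVERHEAD = OUTER_ETHERNET + OUTER_IPV4 + OUTER_UDP + len(vxlan_header(0))
```

Because the tenant's TCP/IP headers end up behind these outer headers, a NIC that only understands plain TCP/IP frames can no longer find them to do checksum or segmentation work. That is the gap the NVGRE and VXLAN offload engines described above are meant to close.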

I/O Virtualization — ConnectX-3 Pro SR-IOV technology provides dedicated adapter resources and guaranteed isolation and protection for virtual machines (VMs) within the server. I/O virtualization with ConnectX-3 Pro gives data center managers better server utilization while reducing cost, power, and cable complexity.

RDMA over Converged Ethernet – ConnectX-3 Pro utilizing IBTA RoCE technology delivers similar low-latency and high-performance over Ethernet networks. Leveraging Data Center Bridging capabilities, RoCE provides efficient low latency RDMA services over Layer 2 Ethernet. With link-level interoperability in existing Ethernet infrastructure, Network Administrators can leverage existing data center fabric management solutions.

Sockets Acceleration – Applications utilizing TCP/UDP/IP transport can achieve industry-leading throughput over 10/40/56GbE. The hardware-based stateless offload engines in ConnectX-3 Pro reduce the CPU overhead of IP packet transport. Socket acceleration software further increases performance for latency sensitive applications.

Storage Acceleration — A consolidated compute and storage network achieves significant cost-performance advantages over multi-fabric networks. Standard block and file access protocols can leverage Ethernet or RDMA for high-performance storage access.

Software Support – All Mellanox adapter cards are supported by Windows, Linux distributions, VMware, FreeBSD, Ubuntu, and Citrix XenServer. ConnectX-3 Pro adapters support OpenFabrics-based RDMA protocols and software and are compatible with configuration and management tools from OEMs and operating system vendors.

Tags: Hyper-V, Mellanox, Microsoft, Network, Network Adapter, Network Virtualization, NIC, NVGRE, NVGRE Offloading, Offloading, RDMA, SCVMM, SMB, SMB Direct, System Center, System Center 2012, System Center 2012 R2, Virtual Machine, Virtual Machine Manager, Virtualization, VMM, Windows Server, Windows Server 2012, Windows Server 2012 R2

Last modified: September 2, 2018