Cisco UCS supports RoCE for Microsoft SMB Direct

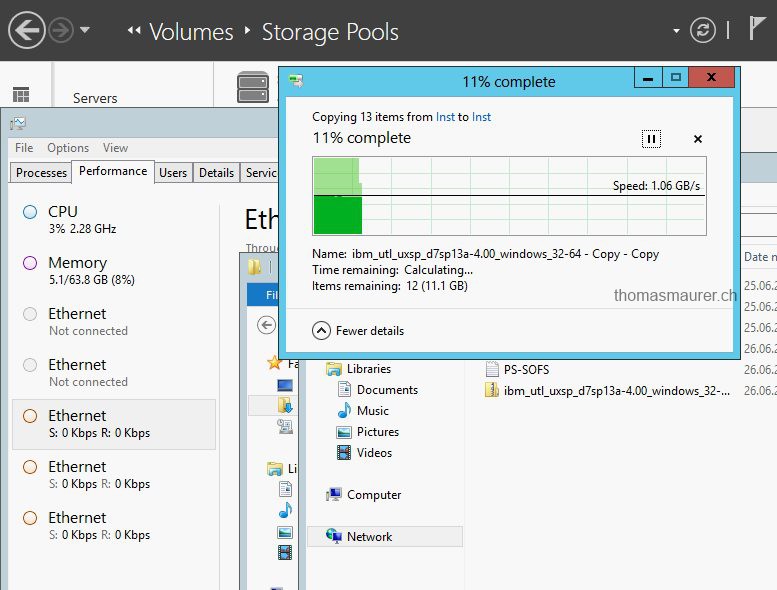

As you may know, we use SMB as the storage protocol for several Hyper-V deployments using Scale-Out...

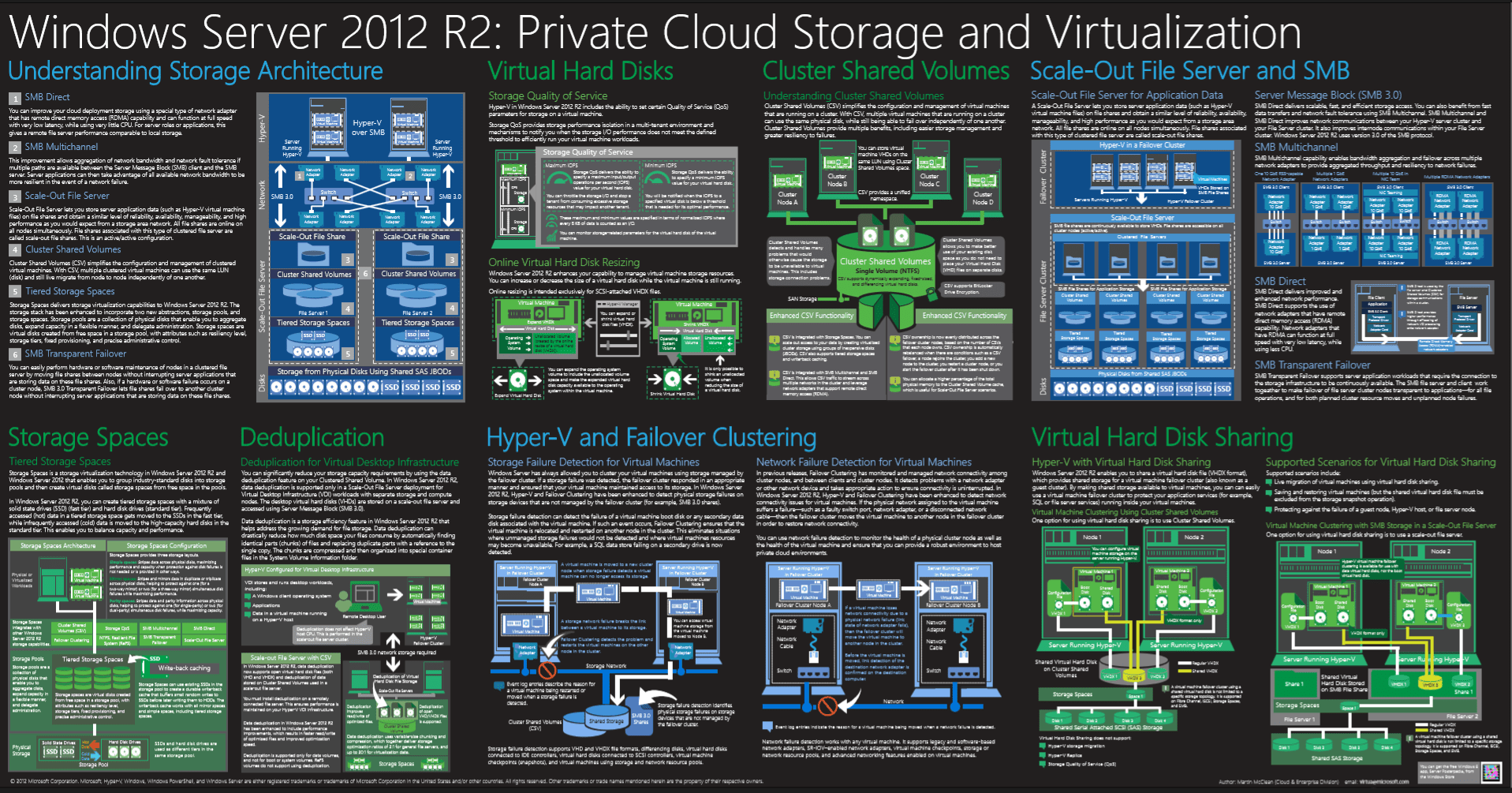

Windows Server 2012 R2 Private Cloud Virtualization and Storage Poster and Mini-Posters

Yesterday, Microsoft released the Windows Server 2012 R2 Private Cloud Virtualization and Storage Poster and Mini-Posters. This includes...

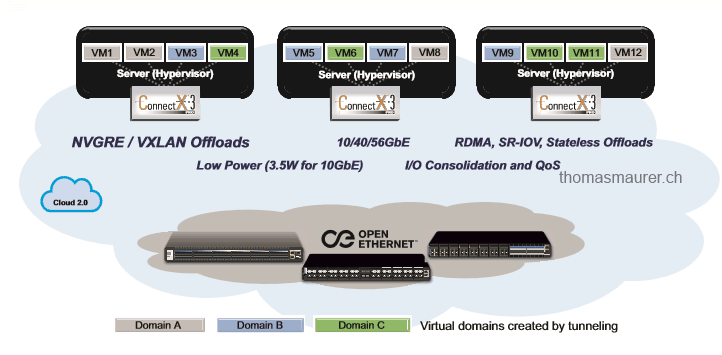

Hyper-V Network Virtualization: NVGRE Offloading

At the moment, I am spending a lot of time working with Hyper-V Network Virtualization in Hyper-V, System Center Virtual Machine Manager and with...

Hyper-V over SMB: SMB Direct (RDMA)

Another important part of SMB 3.0 and Hyper-V over SMB is performance. In the past you could...

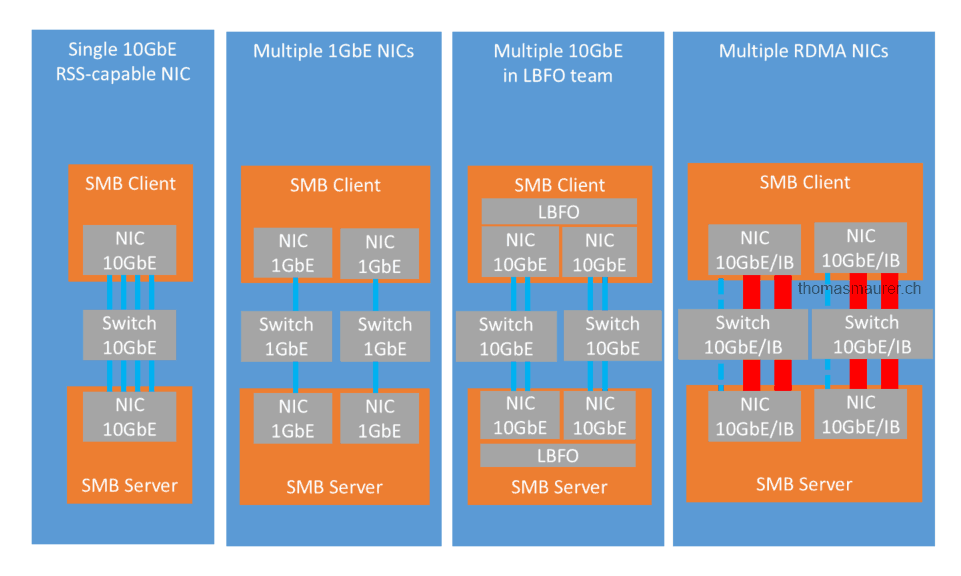

Hyper-V over SMB: SMB Multichannel

As already mentioned in my first post, SMB 3.0 comes with a lot of supporting features that increase the functionality in...