Sort Windows Network Adapter by PCI Slot via PowerShell

If you work with Windows, Windows Server or Hyper-V, you know that before Windows Server 2012...

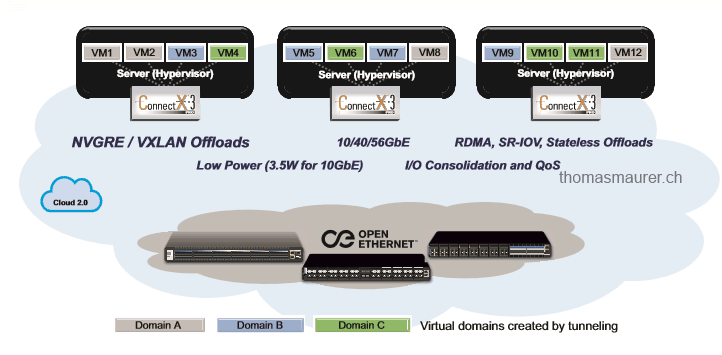

Hyper-V Network Virtualization: NVGRE Offloading

At the moment I spend a lot of time working with Hyper-V Network Virtualization in Hyper-V, System Center Virtual Machine Manager and with...

Hyper-V Converged Fabric with System Center 2012 SP1 – Virtual Machine Manager

This blog post is part of a series about System Center 2012 Virtual Machine Manager that I am writing together with Michel...

Windows Server 2012 Hyper-V Converged Fabric

In Windows Server 2008 R2 we had some really simple configurations and best practices for Hyper-V...

Windows Server 2012 NIC Naming

Some weeks ago I wrote a blog post on how you can configure network adapters on a Hyper-V host via PowerShell. I mentioned that the NICs in...

Configure Hyper-V Host Network Adapters Like A Boss

If you work a lot with Hyper-V and Hyper-V Clustering, you know that something that takes a lot of time is configuring the Hyper-V...

Windows Server 2012 – CDN (Consistent Device Naming)

There is a new feature coming with Windows Server 8 called Consistent Device Naming (CDN) which...

Windows Server 8 NIC Teaming

With the Developer Preview of Windows Server 8, released at BUILD, Microsoft showed the new integrated NIC Teaming...