My Hardware Recommendations for Windows Server 2016

Many people are asking me right now what they should look out for if they are going to buy...

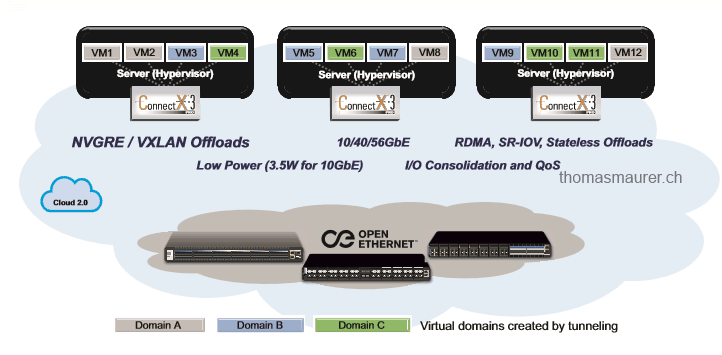

Hyper-V Network Virtualization: NVGRE Offloading

At the moment, I spend a lot of time working with Hyper-V Network Virtualization in Hyper-V, System Center Virtual Machine Manager, and with...