Cloud operations for Windows Server through Azure Arc

Running Windows Server on-premises or at the edge? Learn how you can leverage Azure management...

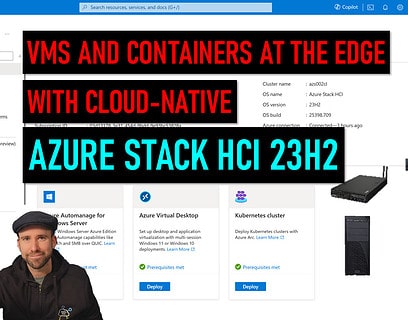

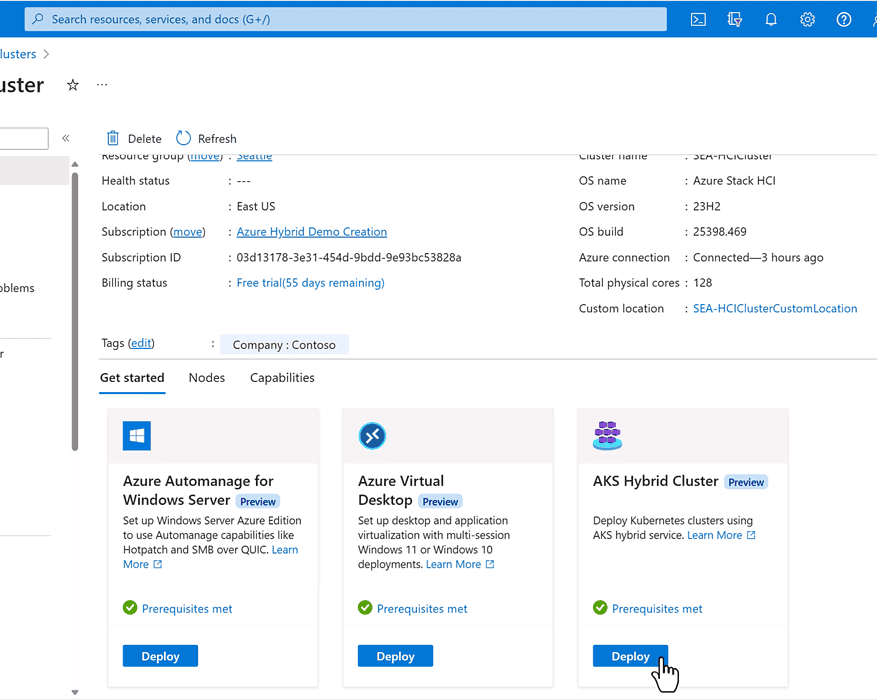

Azure Stack HCI 23H2 – VMs and containers at the edge

At Microsoft Ignite 2023, Microsoft announced Azure Stack HCI 23H2, the latest version of Azure Stack HCI. In this video we are going to have a look at how...

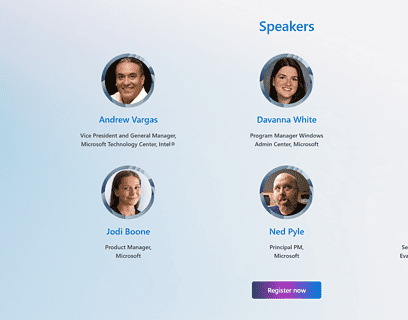

Speaking at the Windows Server Summit 2024

Today I am proud to share that I will be speaking at the Microsoft Windows Server Summit 2024. The Windows Server Summit 2024 is an...

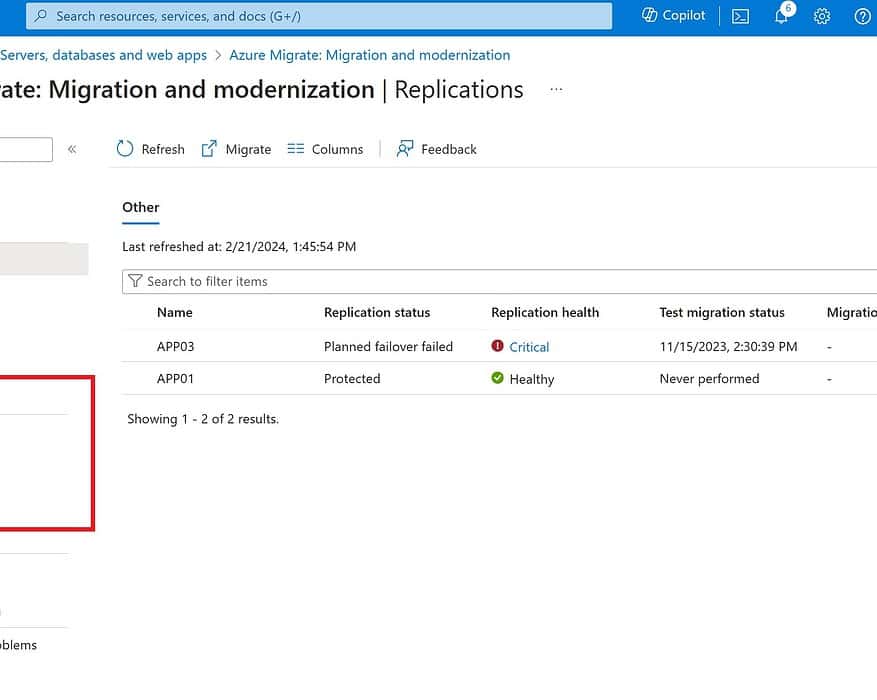

Migrate VMs to Azure Stack HCI

One question I get asked a lot is, how do I migrate Hyper-V or VMware VMs (virtual machines) to...

SCVMM management for Azure Stack HCI 23H2

The System Center team just shared some information on System Center Virtual Machine Manager (SCVMM) supporting the latest Azure Stack HCI,...

Joining Azure Adaptive Cloud Experiences (ACX) Evaluation and Community Enablement

I am excited to announce that I have joined the Adaptive Cloud Experiences (ACX) Evaluation and Community Enablement team as a Senior...

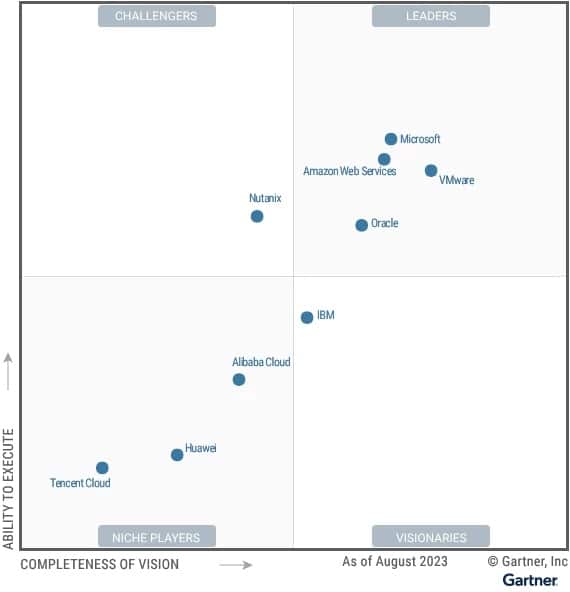

Microsoft is a Leader in the Gartner Magic Quadrant for Distributed Hybrid Infrastructure with Azure Stack HCI

At the end of 2023, Microsoft was recognized as a Leader in the 2023 Gartner Magic Quadrant for...

Live Session: Windows Server upgrade and migration, on-prem, to and in Azure!

Are you ready to learn how to upgrade and migrate your Windows Server workloads to the cloud? Join us for a live session (Wednesday...

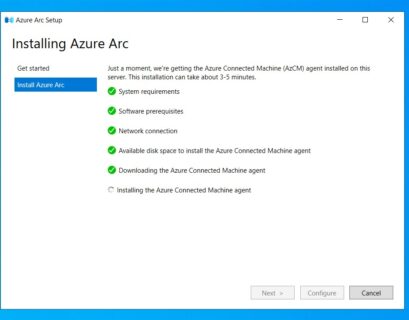

Azure Arc Setup on Windows Server

With Windows Server 2022 and later, Azure Arc Setup is integrated into Windows Server. This allows you to onboard your...

Azure Hybrid, Edge, Adaptive Cloud News from Microsoft Ignite

Today I’m going to share with you some of the exciting Azure hybrid, multicloud, and edge...