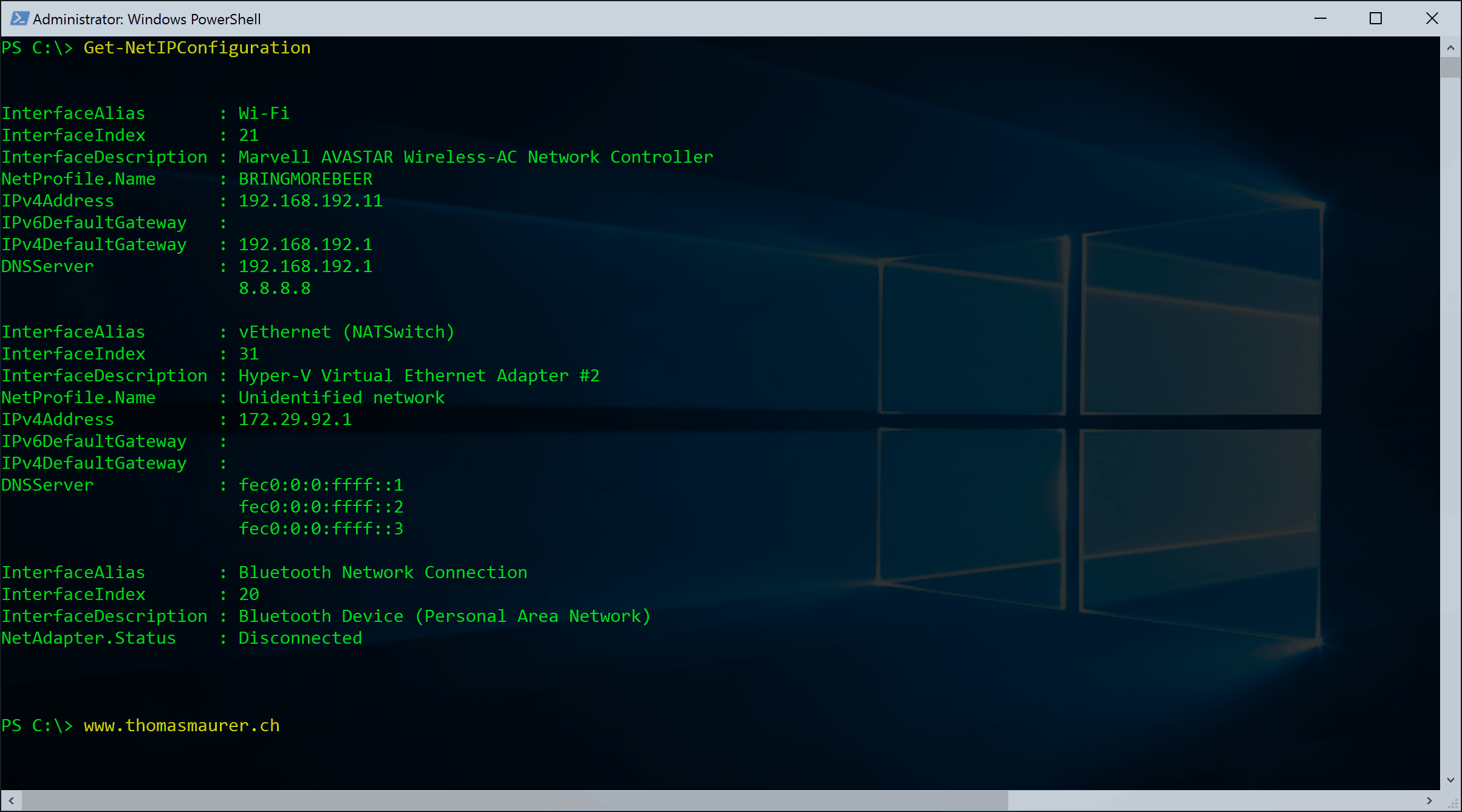

Basic Networking PowerShell cmdlets cheatsheet to replace netsh, ipconfig, nslookup and more

Around 4 years ago I wrote a blog post about how to Replace netsh with Windows PowerShell which...

Cisco UCS and Hyper-V Enable Stateless Offloads with NVGRE

As I already mentioned, I did several Hyper-V and Microsoft Windows Server projects with Cisco UCS. With Cisco UCS, you can now configure...

Sort Windows Network Adapter by PCI Slot via PowerShell

If you work with Windows, Windows Server or Hyper-V, you know that before Windows Server 2012, Windows named the network adapters randomly...

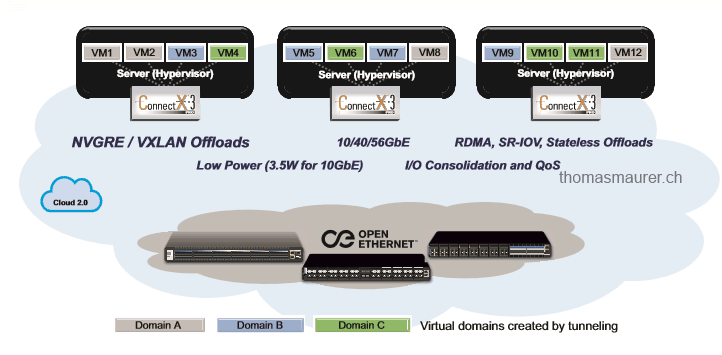

Hyper-V Network Virtualization: NVGRE Offloading

At the moment, I spend a lot of time working with Hyper-V Network Virtualization in Hyper-V, System...