As mentioned in my first post, SMB 3.0 comes with a lot of supporting features that improve performance, security, availability and backup. Here are some quick notes about the features that make the whole Hyper-V over SMB scenario work. This time: SMB Multichannel.

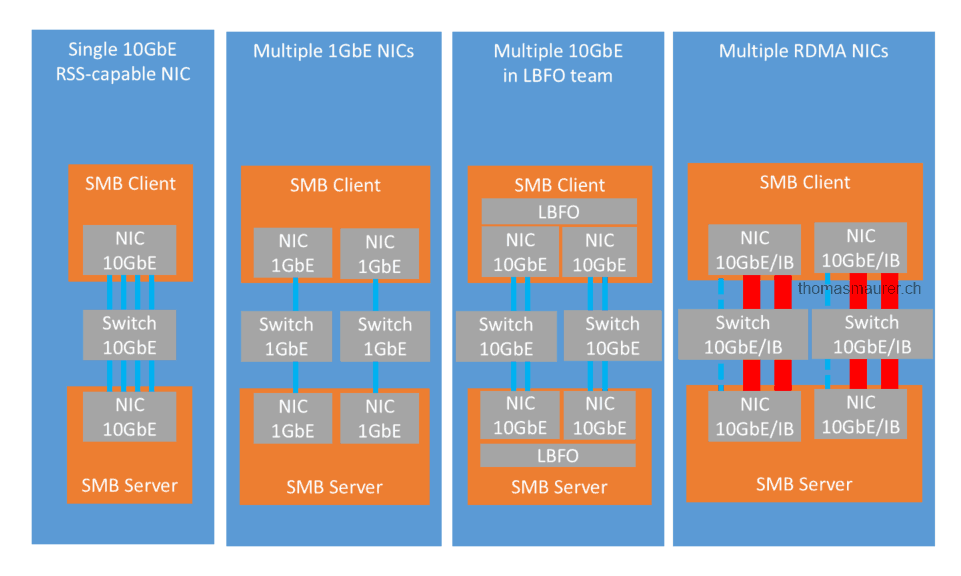

SMB Multichannel was designed to solve two problems: make the path from the Hyper-V host (SMB client) to the File Server or Scale-Out File Server (SMB server) redundant, and get more performance by using multiple network paths. If you are using iSCSI or Fibre Channel, you use MPIO (Multipath I/O) to use multiple available paths to the storage. For normal SMB traffic you may have used NIC Teaming to achieve that. SMB Multichannel is a much easier solution which offers great performance. SMB Multichannel will automatically make use of different network adapters which are configured with different IP subnets.

In my tests and in the environments I have built for customers, I have seen great performance with SMB Multichannel. It works “better” than NIC Teaming, because with teaming you mostly get just one active network interface unless you use LACP or something similar, which requires configuration on the network switches, and with cheap switches you may lose redundancy. The same goes for MPIO: most of the time it works great, but sometimes you do not get the performance you should get in an active/active configuration. With SMB Multichannel I can simply bundle two or even more NICs together and Multichannel will make use of all of them.

By the way, SMB Multichannel is also a must-have if you are using RDMA NICs, because SMB Multichannel is the only way to get redundancy with them.

SMB Multichannel and NIC Teaming

SMB Multichannel also works in combination with Windows Server NIC Teaming. SMB Direct (RDMA), however, does not allow you to use NIC Teaming or the Hyper-V Virtual Switch, because you would lose the RDMA functionality.
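To check whether this affects a given host, you can look at which adapters have RDMA enabled and whether any of them are members of a NIC team. This is only a rough sketch, assuming the NetAdapter and NetLbfo cmdlets that ship with Windows Server 2012 and later are available on the host:

```powershell
# List all adapters and whether RDMA is currently enabled on them.
Get-NetAdapterRdma

# List existing NIC teams and their member adapters.
# An RDMA adapter that shows up here as a team member loses its RDMA capability.
Get-NetLbfoTeam
Get-NetLbfoTeamMember
```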

How do you set up SMB Multichannel?

The setup of SMB Multichannel is quite easy because it’s enabled by default, but there are some things you should know about SMB Multichannel for designing your environment or for troubleshooting an installation.

Verify SMB Multichannel

Verify that SMB Multichannel is active on the client

Get-SmbClientConfiguration | Select EnableMultichannel

Verify that SMB Multichannel is active on the server

Get-SmbServerConfiguration | Select EnableMultichannel

You can also disable or enable Multichannel if you need to.

Set-SmbServerConfiguration -EnableMultiChannel $false

Set-SmbClientConfiguration -EnableMultiChannel $false

Set-SmbServerConfiguration -EnableMultiChannel $true

Set-SmbClientConfiguration -EnableMultiChannel $true

To see whether all the right connections are used, run the following commands on the client. They list all SMB connections and let you verify that all SMB Multichannel connections are open and using the right protocol version.

Get-SmbConnection

Get-SmbMultichannelConnection
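If a connection you expect is missing, it can help to look at what SMB itself knows about the network interfaces. The following is a small sketch rather than a complete troubleshooting procedure. The selected properties are the ones I would expect on the objects returned by Get-SmbConnection, so adjust them if your output differs:

```powershell
# On the Hyper-V host (SMB client): interfaces SMB can use,
# including whether they are RSS or RDMA capable.
Get-SmbClientNetworkInterface

# On the file server (SMB server): the same view from the server side.
Get-SmbServerNetworkInterface

# On the client: Dialect shows the negotiated SMB version.
# Multichannel requires SMB 3.0 or later on both ends.
Get-SmbConnection | Select-Object ServerName, ShareName, Dialect, NumOpens
```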

SMB Multichannel Constraint

Another thing you have to know about SMB Multichannel is how to limit the SMB connection to specific network interfaces. For example, you have a Hyper-V host with a 1 Gbit network adapter for management and two 10 Gbit (or faster) RDMA interfaces which should be used for the connection to the storage. You want to make sure that the Hyper-V host only uses the RDMA network interfaces to connect to the storage. With a simple PowerShell cmdlet you can limit the Hyper-V host to only access the file share over the RDMA interfaces.

- Fileshare where the Virtual Machines are stored: \\SMB01\VMs01

- Name of the 1 GBit network interface for Management and stuff: Management

- Name of the RDMA interfaces: RDMA01 and RDMA02

New-SmbMultichannelConstraint -InterfaceAlias RDMA01, RDMA02 -ServerName SMB01

Make sure you run this on every Hyper-V host, not on the SMB file server.
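To confirm that the constraint is in place, or to take it out again, something like the following should work on the Hyper-V host. Treat it as a sketch: it reuses the example server name SMB01 from above and assumes the constraint objects expose a ServerName property to filter on.

```powershell
# Show all Multichannel constraints configured on this SMB client.
Get-SmbMultichannelConstraint

# Ask the SMB client to re-evaluate its Multichannel connections,
# then check which interfaces are actually used.
Update-SmbMultichannelConnection
Get-SmbMultichannelConnection

# Remove the constraints for SMB01 again if they are no longer wanted.
Get-SmbMultichannelConstraint |
    Where-Object ServerName -eq 'SMB01' |
    Remove-SmbMultichannelConstraint
```

After the constraint is applied, Get-SmbMultichannelConnection should only list connections to SMB01 that go over RDMA01 and RDMA02.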

There is a little bit more behind SMB Multichannel, but this should give you a great jump start into this feature. If you want to know more about SMB Multichannel, check out Jose Barreto’s (Microsoft Corp.) blog post, The basics of SMB Multichannel.

Tags: Cloud, Fileshare, Hyper-V, Microsoft, Multichannel, PowerShell, Private Cloud, RDMA, SMB, SMB Multichannel, Storage, Virtual Machine, Windows Server, Windows Server 2012, Windows Server 2012 R2

Last modified: September 2, 2018

I just wanted to post what I have discovered while deploying a 3 Node HyperV 3 Cluster with 2 Clustered SOFS. My nodes are interconnected via 6 x 54Gb RDMA connections. Regarding the statement above:

“SMB Multichannel will automatically make use of different network adapters which are configured with different IP subnets.”

I have found this to be incorrect where it states that the adapters have to be configured with different IP subnets. I tried this approach and it does not work, since the subnets do not know how to reach each other. Unless you have an intermediary router or a managed switch that can perform VLAN routing, this will not give you SMB Multichannel functionality.

The only possible scenario where this might be an exception is if you are using your RDMA capable interfaces to route all traffic (Public, Live Migration, Private, Replica, etc). In this scenario you would present the interfaces to Cluster Manager and you would have multiple DNS entries for your hosts. Is this how it was set up in your environment?

As for the subnet part: say you have 3 different subnets, and each host has all three subnets available. Then host A can contact host B via subnet 1, subnet 2 or subnet 3. No routing etc. needed.

Yes if you use a SOFS cluster you get multiple DNS entries for your SOFS nodes and your SOFS Cluster Role (not the Cluster Object and not the Hyper-V hosts).

I have set up and verified the functionality of SMB Multichannel between a single SMB file server with 6 1Gb NICs and a cluster node with 6 1Gb NICs. It works like a charm and uses all 6 NICs, effectively giving me 6Gb/sec transfer speed. They are RSS capable NICs, not RDMA capable. However, when I place all 6 NICs on both servers in a team, I no longer get the 6Gb speed, I only get 1Gb. The team is switch dependent, using address hash, and I have set up LACP on my switch, making a channel group for each server (the SMB server and the cluster node). Any idea why Multichannel would work without the team but won't work with the team?

Well, Multichannel uses a different way to load balance traffic. So for SMB, Multichannel is kind of the way to go. For other things in Windows Server 2012 R2, I recommend using Switch Independent teaming in “Dynamic” mode.

Do you mean that Cluster Multichannel will not work without the correct DNS entries?

Make sure your DNS is also set up correctly. All SMB interfaces should be registered in DNS, otherwise you risk a single point of failure. And if you are working with a SOFS (Scale-Out File Server) cluster, Multichannel will not work “without” the correct DNS entries.

Also, how do you address this issue if your RDMA interfaces are Infiniband since IB cannot forward Ethernet traffic?

How about Windows Server 2016?

How about the DNS configuration on the SMB NICs?

No DNS needed on the SMB interfaces.

I found on the internet that with Windows Server 2016 you can have multiple NICs on the same subnet with SMB Multichannel. Is this right?

This is correct, that works with 2016.

This is where iSCSI and SMB are different. SMB channels can also be used for cluster traffic. It works great, and especially if you use RDMA you can get extra performance for cluster traffic.

On the internet I found some blogs that say that in clustering you need to set Clusternetwork = None for iSCSI. Does that also apply to SMB Multichannel?

How about SOFS cluster?

We have 4 nodes, each with 3 x 10 GB NICs: one for management and two for SMB traffic. Do we need to set Cluster and Client only on the management network, and Cluster Only on the SMB NICs?