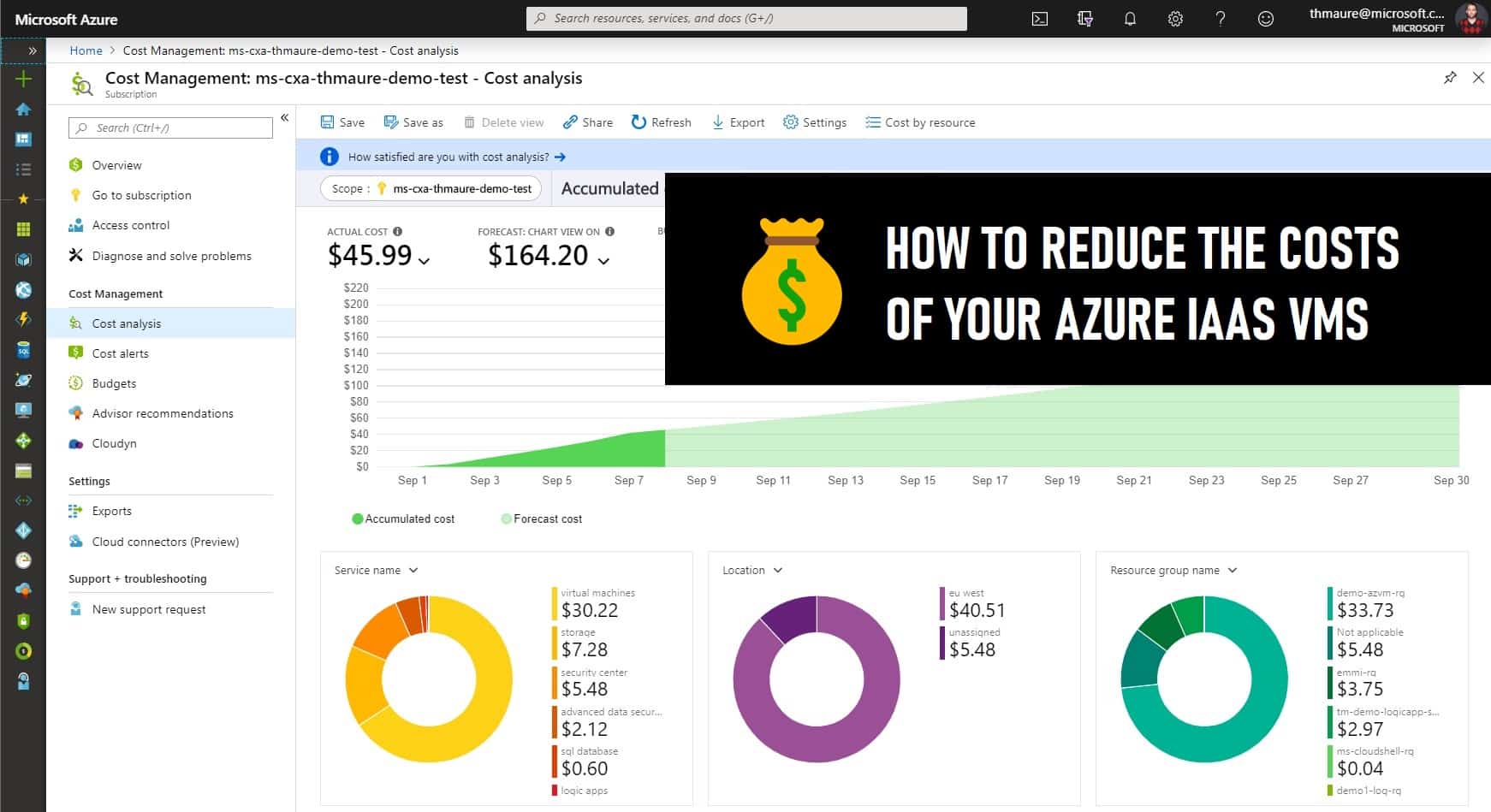

How to Reduce the Costs of your Azure IaaS VMs

Azure Infrastructure-as-a-service (IaaS) offers significant benefits over traditional...

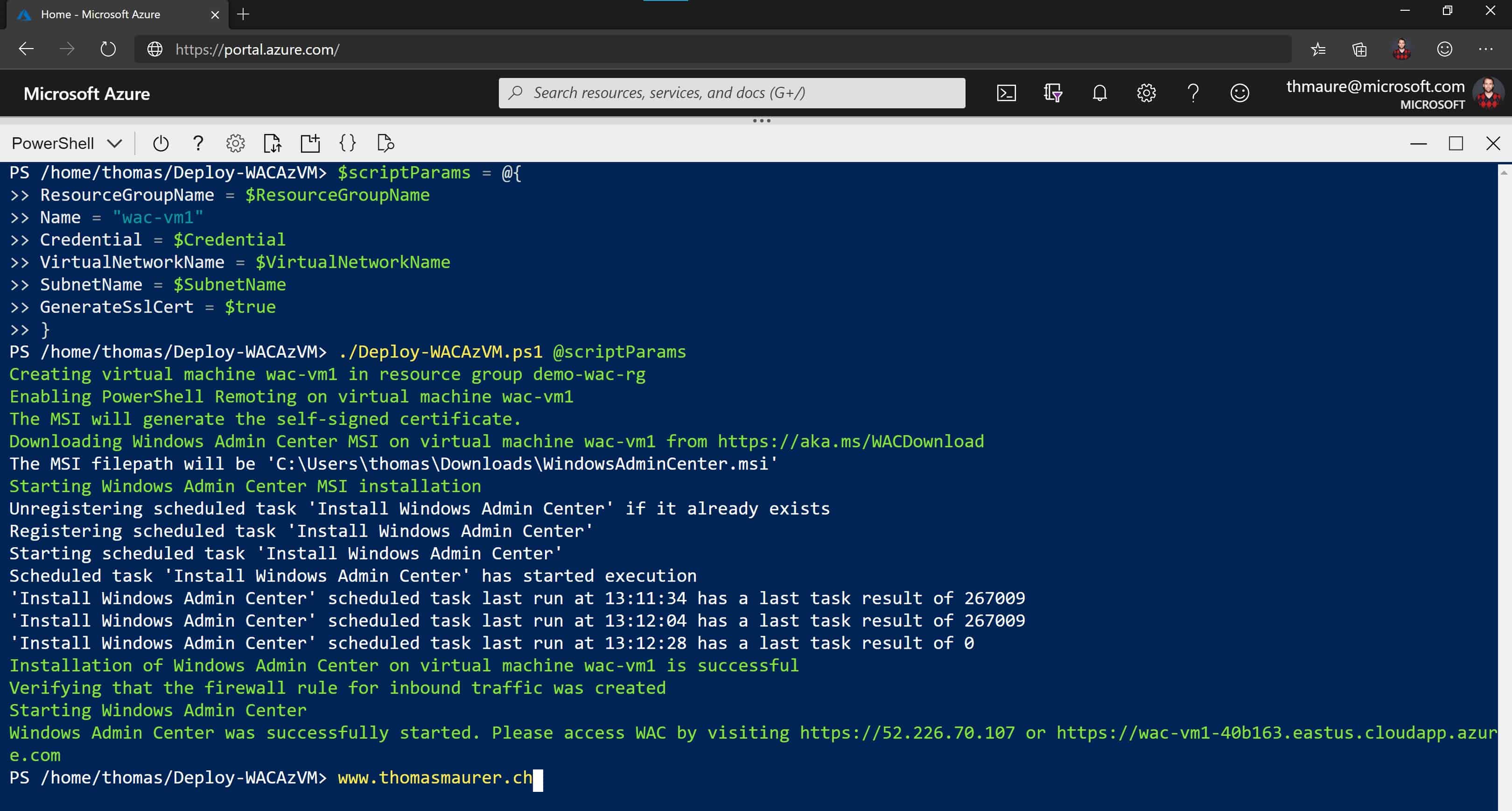

Deploy and Install Windows Admin Center in an Azure VM

The great thing about Windows Admin Center (WAC) is that you can manage every Windows Server, no matter where it is running. You can manage...

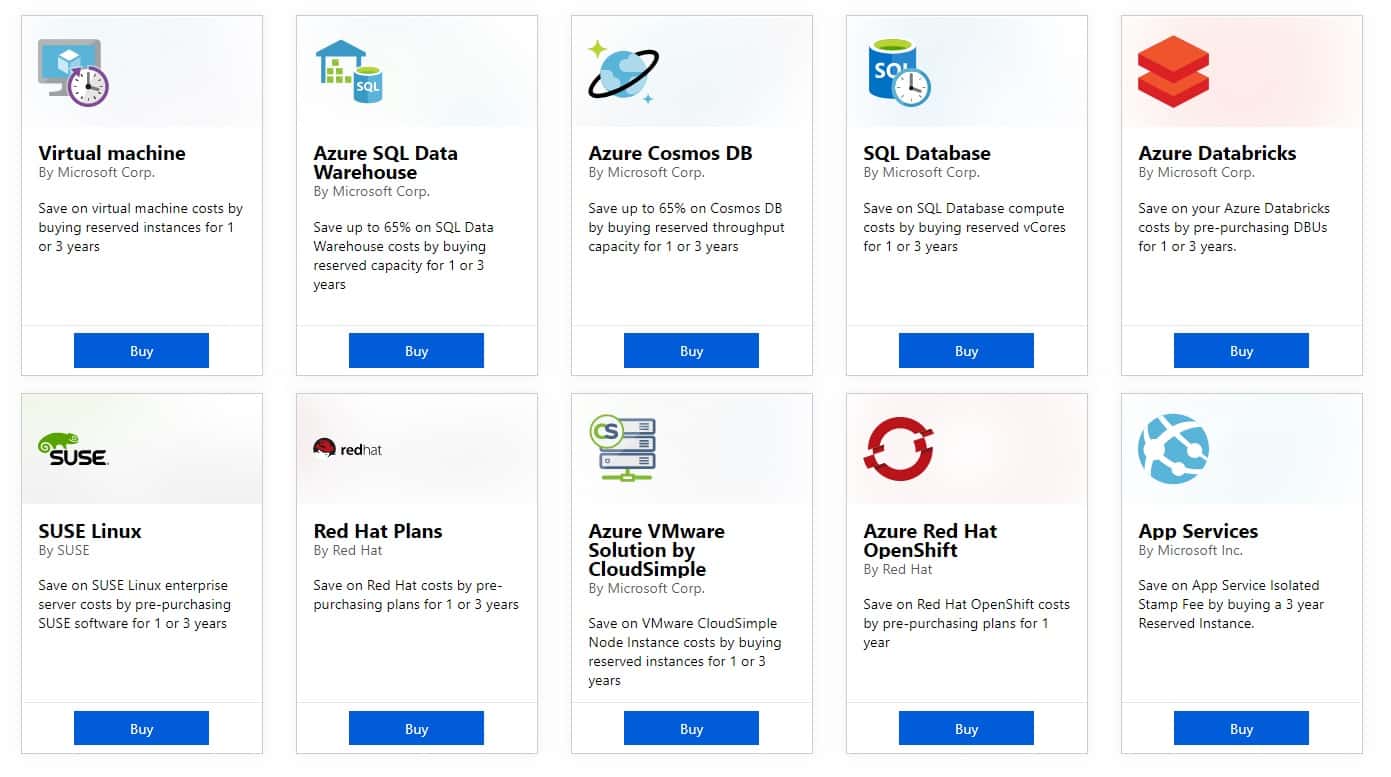

How to Save Money on Azure using Azure Reservations

I wanted to quickly share something that has existed for quite some time, but from talking with customers, a lot of people still don’t know...

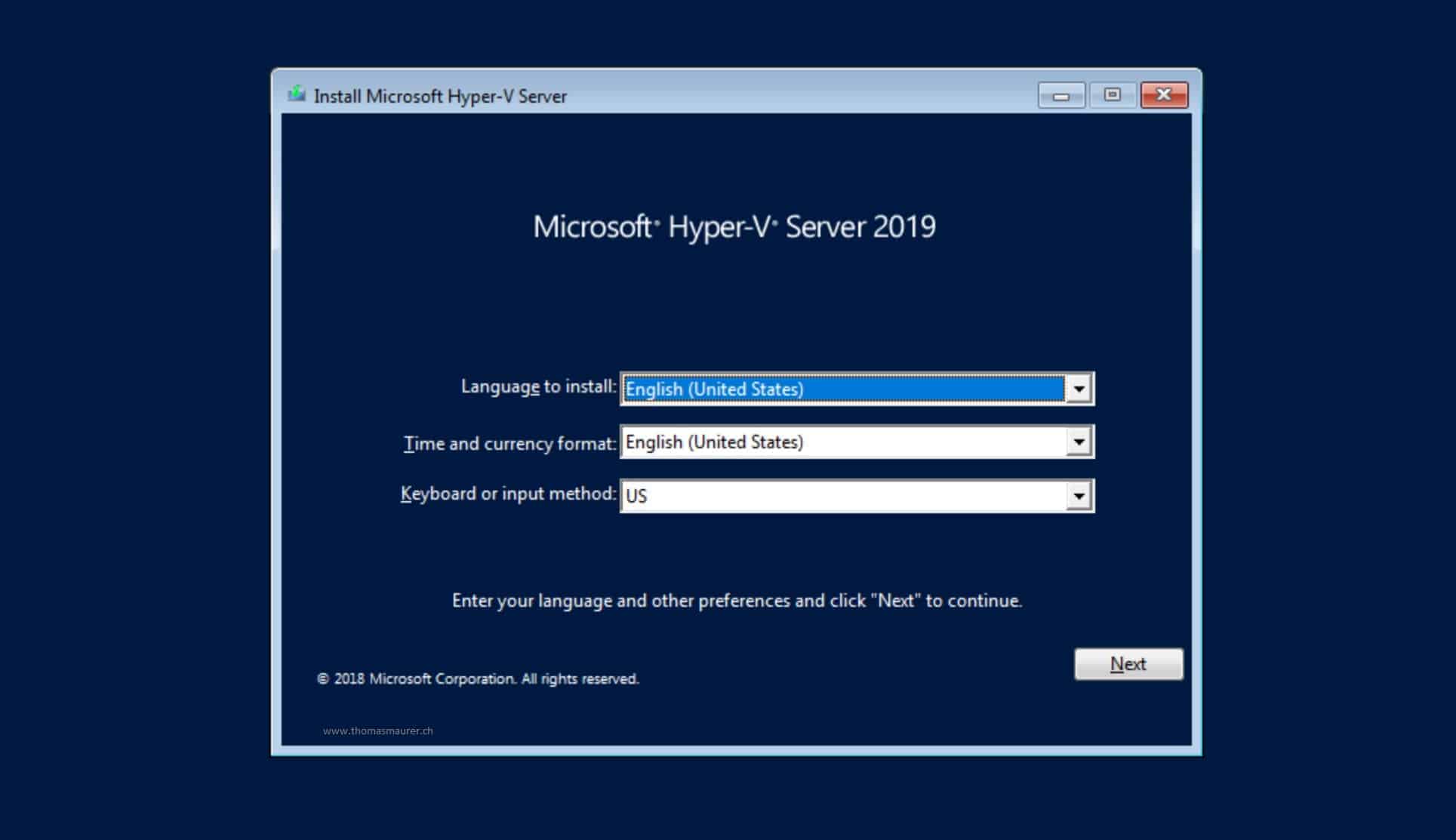

Download Hyper-V Server 2019 now

A lot of people have been waiting for this. After the release of Windows Server 2019 back in...

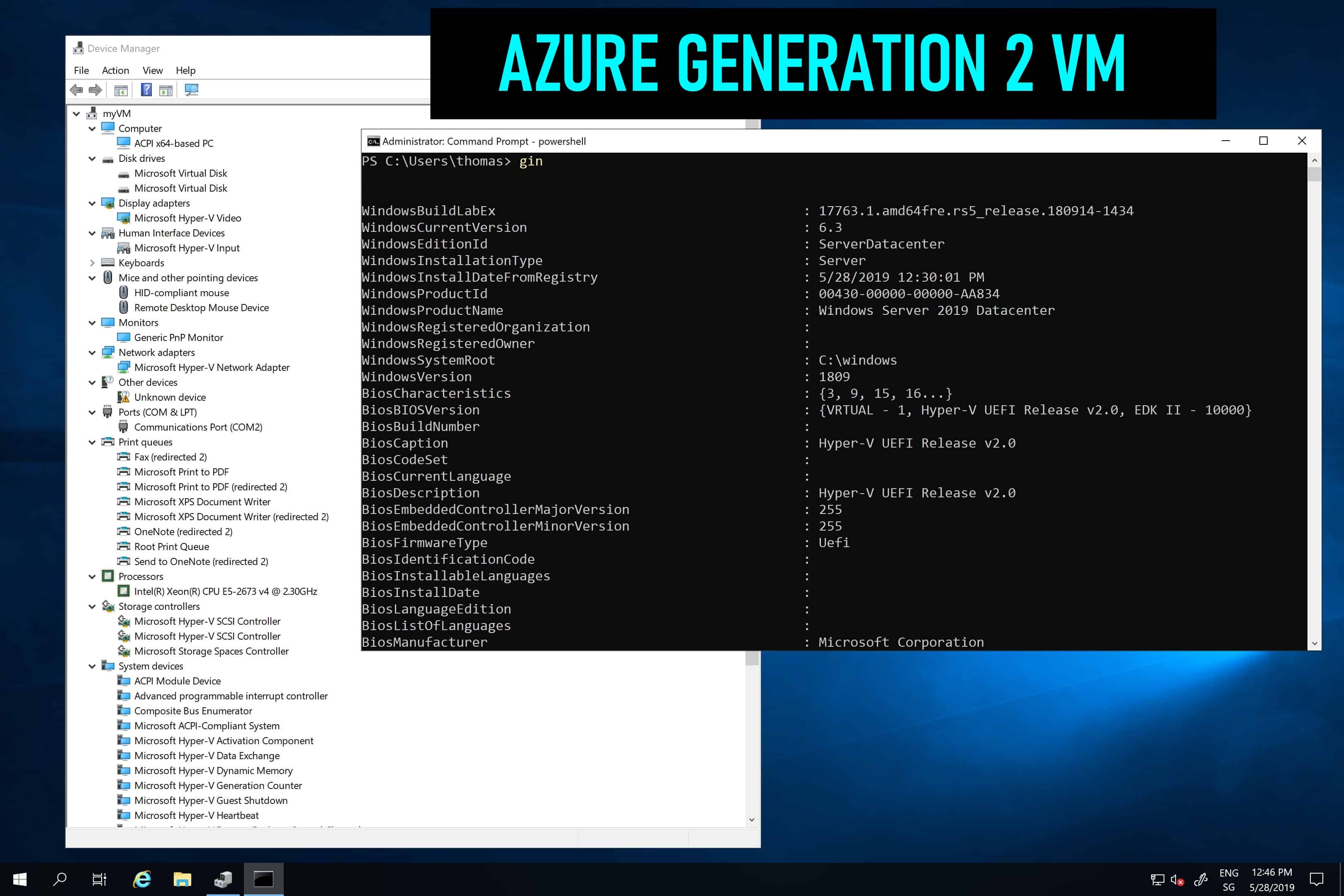

Generation 2 VM support on Azure – and why should I care?

A couple of days ago Microsoft announced the public preview of Generation 2 virtual machines on Azure. Generation 2 virtual machines...

Microsoft and Canonical create Azure optimized Ubuntu Kernel

Ubuntu is a popular choice for virtual machines running on Microsoft Azure and Hyper-V. Yesterday Microsoft and Canonical announced that they will...

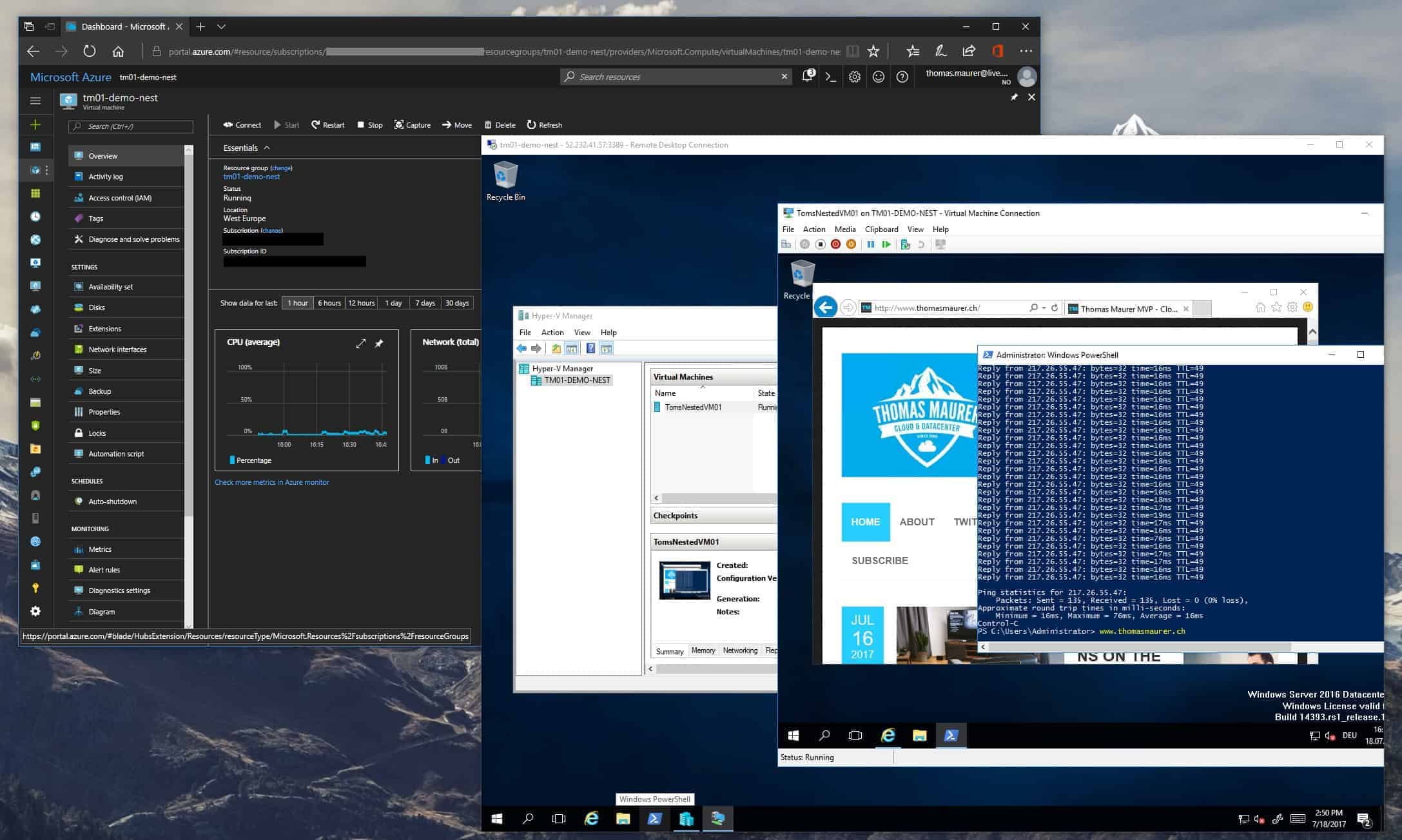

How to set up Nested Virtualization in Microsoft Azure

At the Microsoft Build conference this year, Microsoft announced Nested Virtualization for Azure...

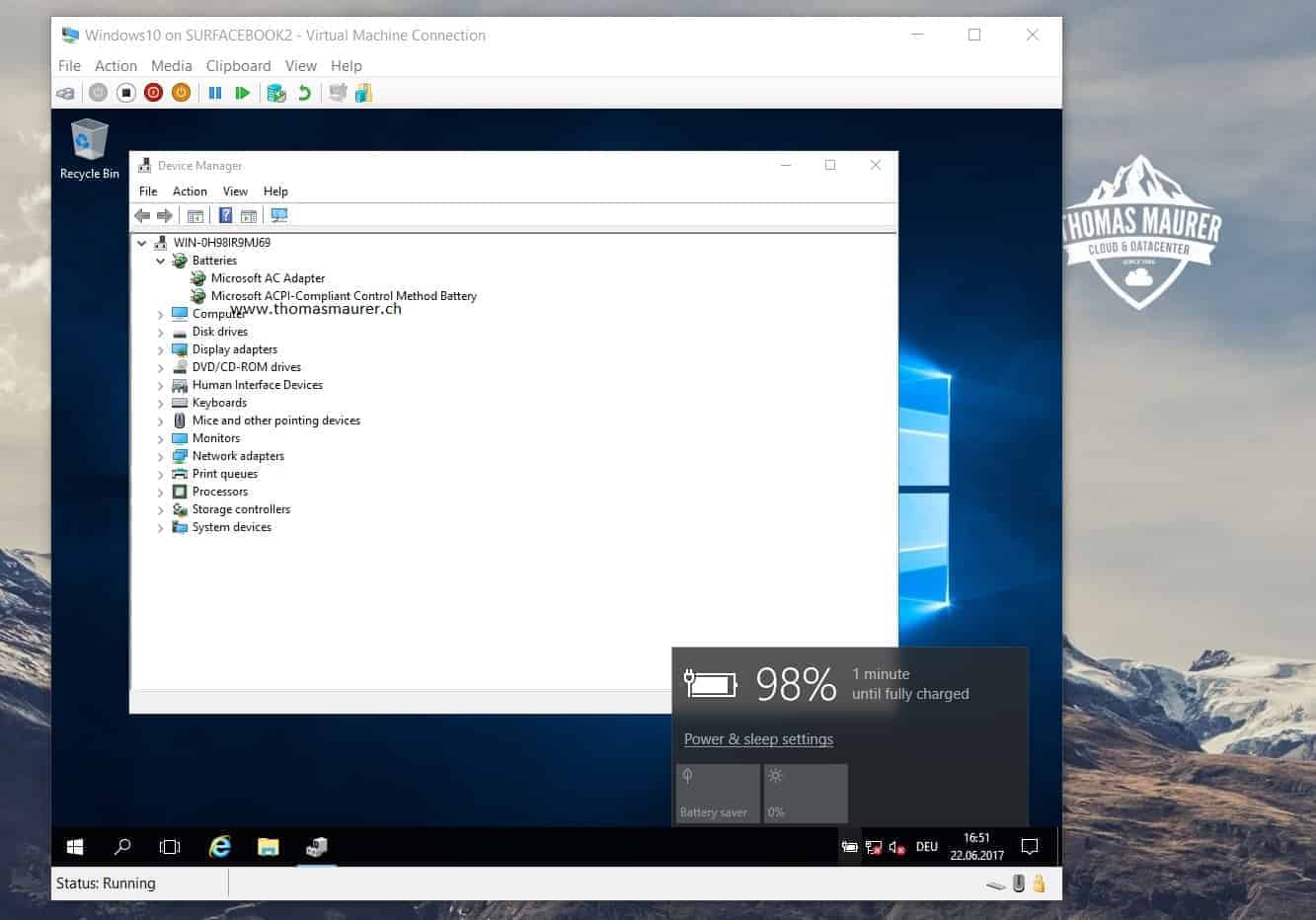

Hyper-V gets Virtual Battery support

Last week Microsoft announced Windows 10 Insider Preview build 16215, which added a lot of new features to Windows 10. With Windows 8...

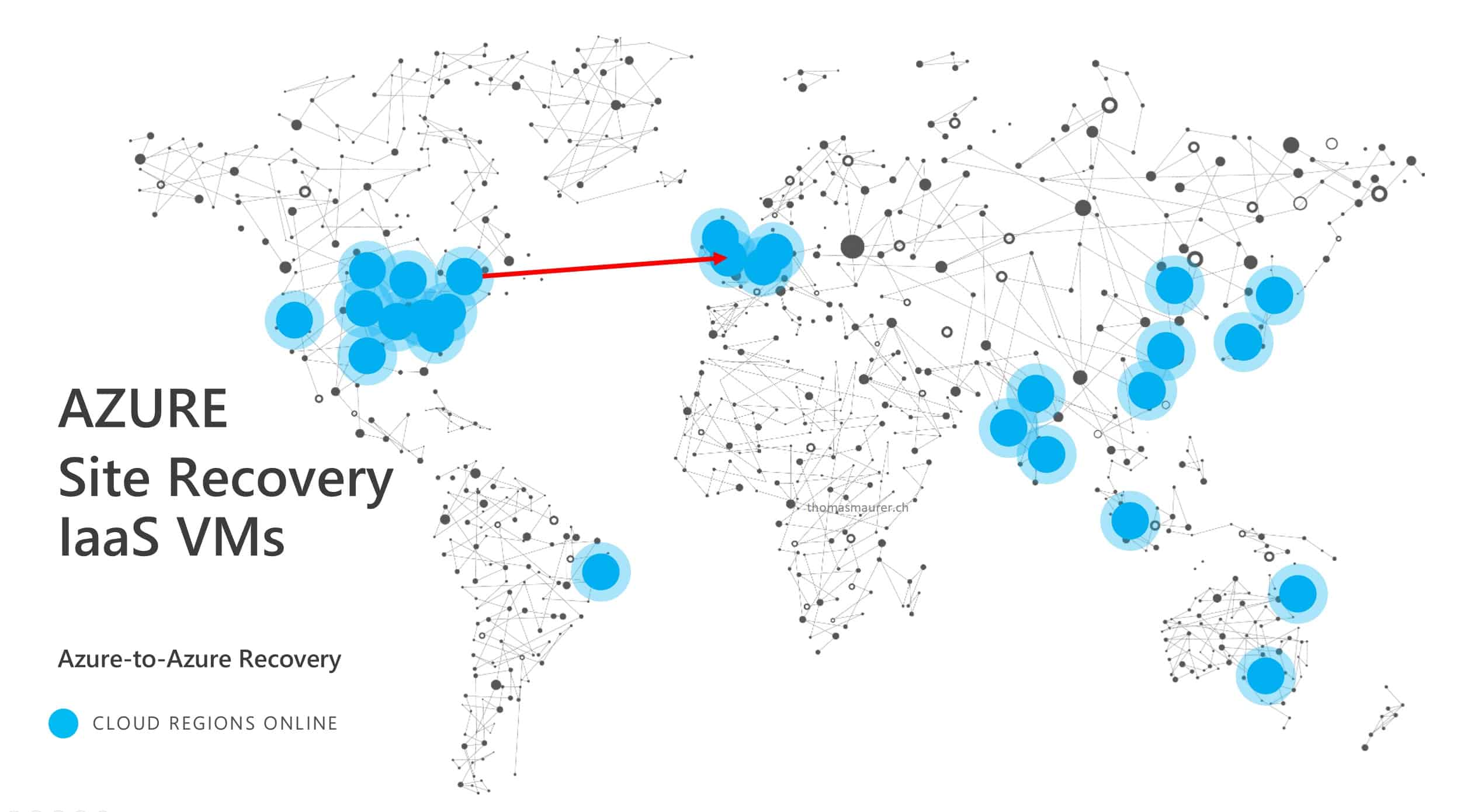

Disaster recovery for Azure IaaS virtual machines using ASR

Microsoft today announced the public preview of disaster recovery for Azure IaaS virtual machines. This is Azure Site Recovery (ASR) for...

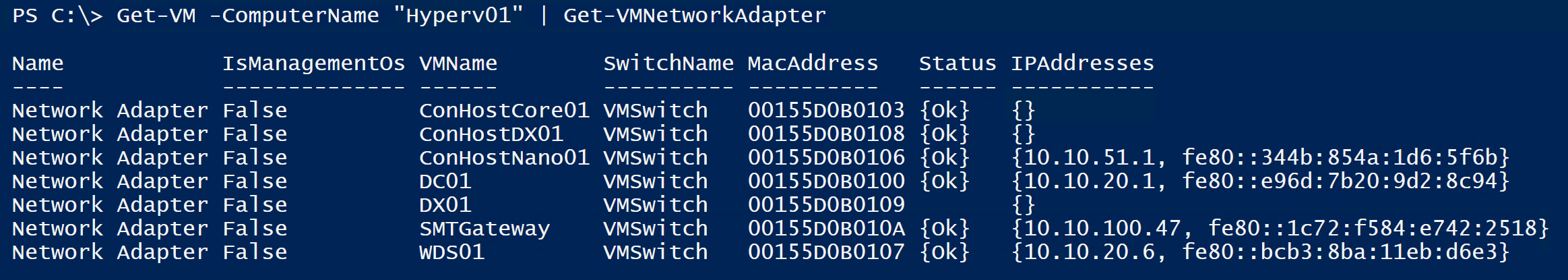

PowerShell One-liner to list IP Addresses of Hyper-V Virtual Machines

Here is a very quick PowerShell command to list all the Virtual Network Adapters, including IP...