Hyper-V over SMB: SMB Direct (RDMA)

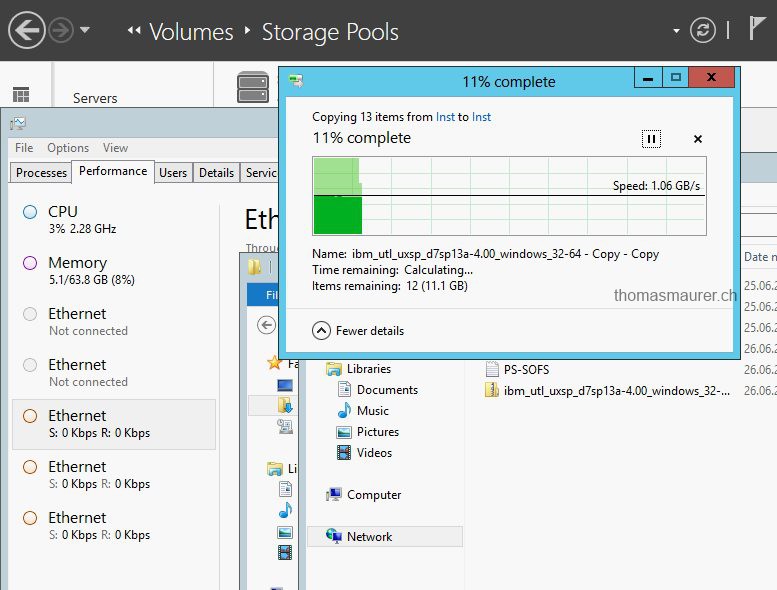

Another important part of SMB 3.0 and Hyper-V over SMB is performance. In the past you could...

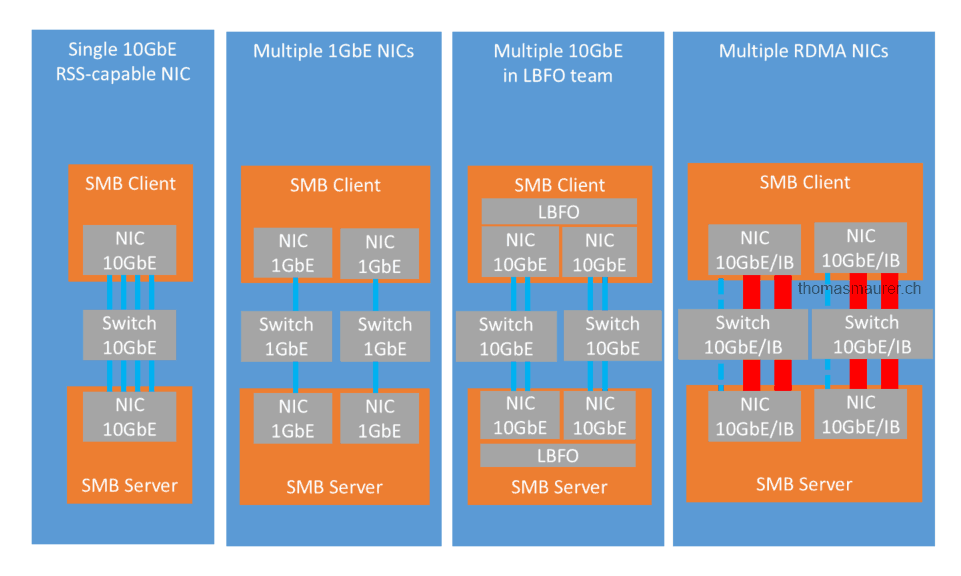

Hyper-V over SMB: SMB Multichannel

As already mentioned in my first post, SMB 3.0 comes with a lot of supporting features that increase the functionality in...

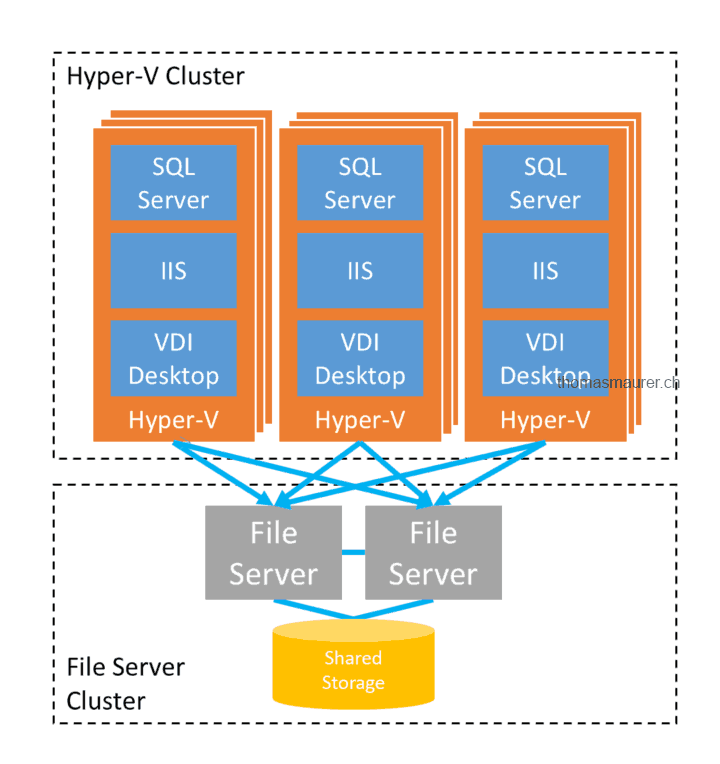

What is Hyper-V over SMB?

With the release of Windows Server 2012, Microsoft offers a new way to store Hyper-V virtual machines on shared storage. In Windows Server...

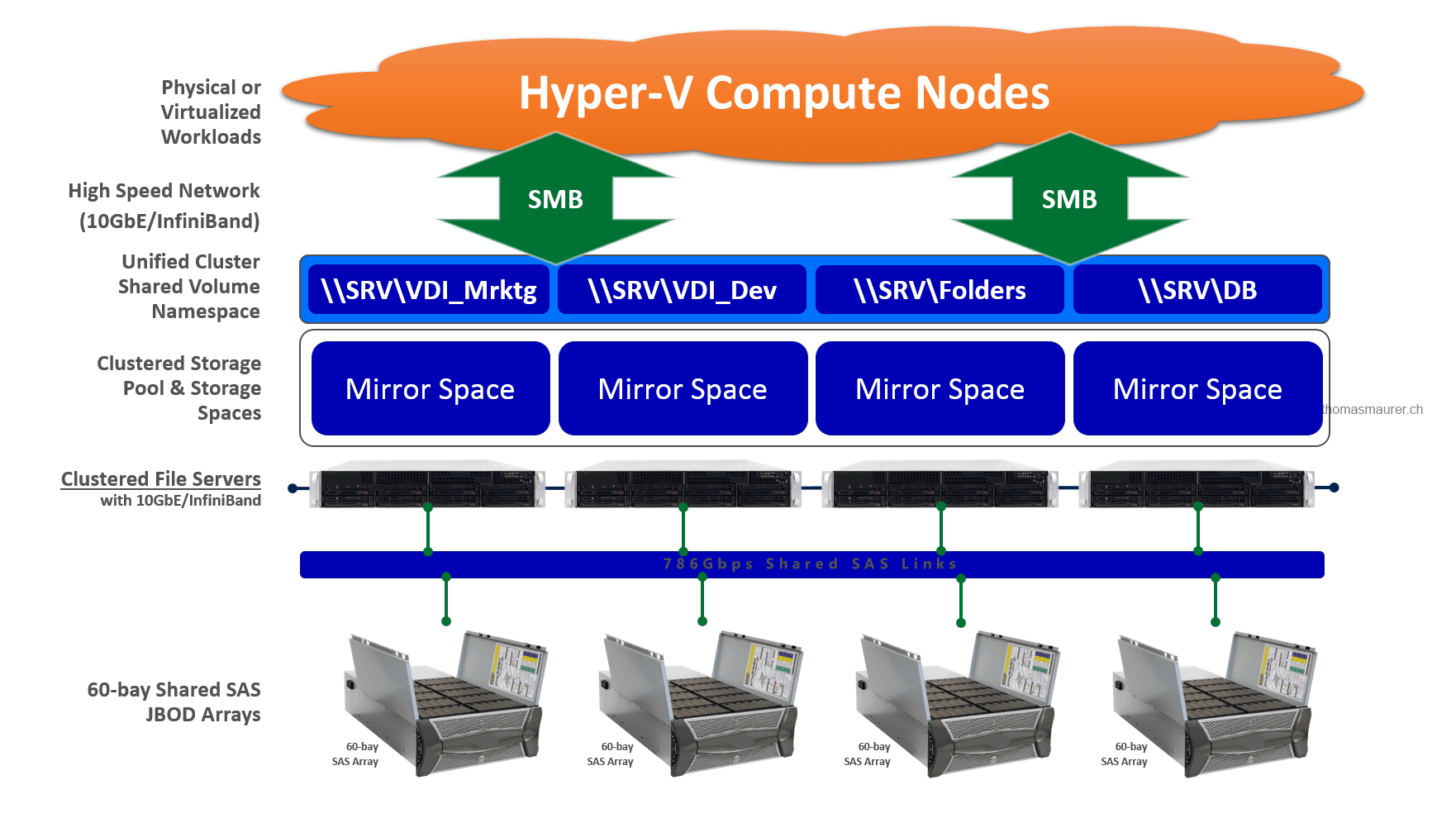

What’s new in Windows Server 2012 R2 Storage Spaces

Microsoft announced the next version of the Windows Server platform called Windows Server 2012 R2...

How to build an iSCSI Target Cluster on Windows Server 2012

In Windows Server 2012, Microsoft introduced the new iSCSI Target, which is now built in to Windows Server 2012 and allows you to connect...

Geekmania 2012 Recap

Last Friday I had two sessions at Geekmania 2012, the conference for real geeks. Together with other architects and engineers from itnetx...

Windows 8 Consumer Preview: Cannot access NetApp CIFS share

If you try to connect to a NetApp CIFS share from the Windows 8 beta, you may not be able to access the share because of the following error: SMB...

First KTSI Project done

Now, after a little over three months, I have finished my first project for the 5th KTSI semester. As a project I created an overview of the...