What is Hyper-V over SMB?

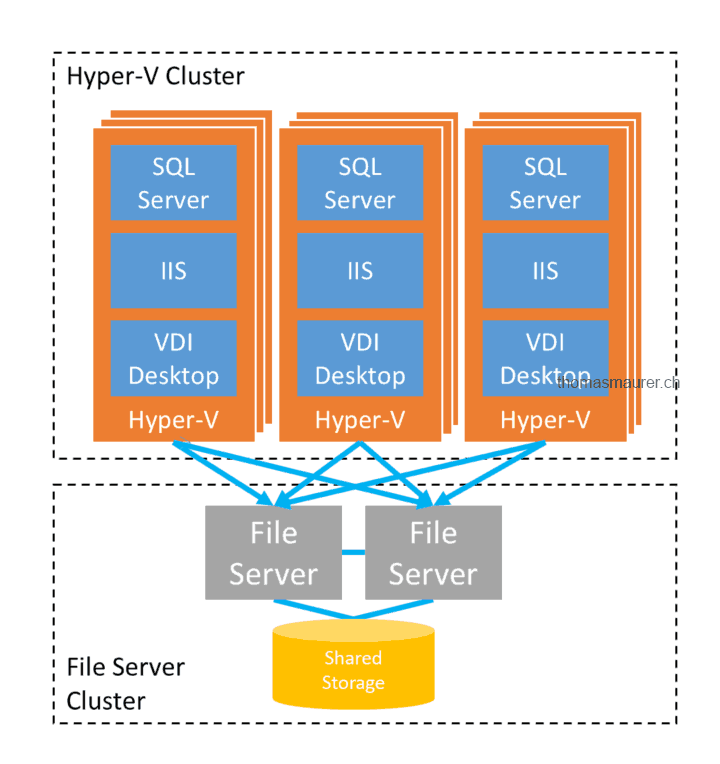

With the release of Windows Server 2012, Microsoft offers a new way to store Hyper-V Virtual Machine...

How to build an iSCSI Target Cluster on Windows Server 2012

In Windows Server 2012, Microsoft introduced the new iSCSI Target, which is now built into Windows Server 2012 and allows you to connect...

Windows Server 2012 Hyper-V Converged Fabric

In Windows Server 2008 R2, we had some really simple configurations and best practices for Hyper-V networking. The problem...

Create a Windows Server 2012 iSCSI Target Server

In my lab I don’t have good storage that I can use for my Hyper-V clusters. But with Windows...

Hyper-V Server: Enable Jumbo Frames on Intel NICs

If you are using iSCSI as your storage connection, you can gain a lot of performance by enabling jumbo frames. It is important that your storage,...
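To make the jumbo-frame performance claim a little more concrete, here is a rough Python sketch comparing standard 1500-byte frames with 9000-byte jumbo frames. It is a back-of-the-envelope calculation, not a benchmark: the overhead constants assume untagged Ethernet frames and IPv4/TCP headers without options, and the 10 GbE link speed is a hypothetical example rather than a figure from the post.

```python
"""Back-of-the-envelope look at why jumbo frames can help iSCSI throughput.

Assumptions (illustrative, not measured): untagged Ethernet frames,
IPv4 and TCP headers without options, and a fully saturated link.
"""

# Per-frame wire cost outside the IP packet (bytes):
# preamble + SFD (8), Ethernet header (14), FCS (4), inter-frame gap (12).
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12  # 38 bytes

# IPv4 header (20) + TCP header without options (20).
IP_TCP_HEADERS = 20 + 20  # 40 bytes


def payload_efficiency(mtu: int) -> float:
    """Fraction of wire time that carries TCP payload for a given MTU."""
    payload = mtu - IP_TCP_HEADERS
    wire_bytes = mtu + ETHERNET_OVERHEAD
    return payload / wire_bytes


def frames_per_second(mtu: int, link_bps: float) -> float:
    """Number of full-size frames a saturated link carries per second."""
    wire_bits = (mtu + ETHERNET_OVERHEAD) * 8
    return link_bps / wire_bits


if __name__ == "__main__":
    link = 10e9  # hypothetical 10 GbE link
    for mtu in (1500, 9000):
        print(
            f"MTU {mtu}: {payload_efficiency(mtu):.2%} payload, "
            f"{frames_per_second(mtu, link):,.0f} frames/s"
        )
```

Under these assumptions, jumbo frames raise the payload share of the wire from about 95% to about 99% and cut the number of frames per second roughly sixfold, which means less per-packet processing on both the Hyper-V hosts and the storage target.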