How to Sync Azure Blob Storage with AzCopy

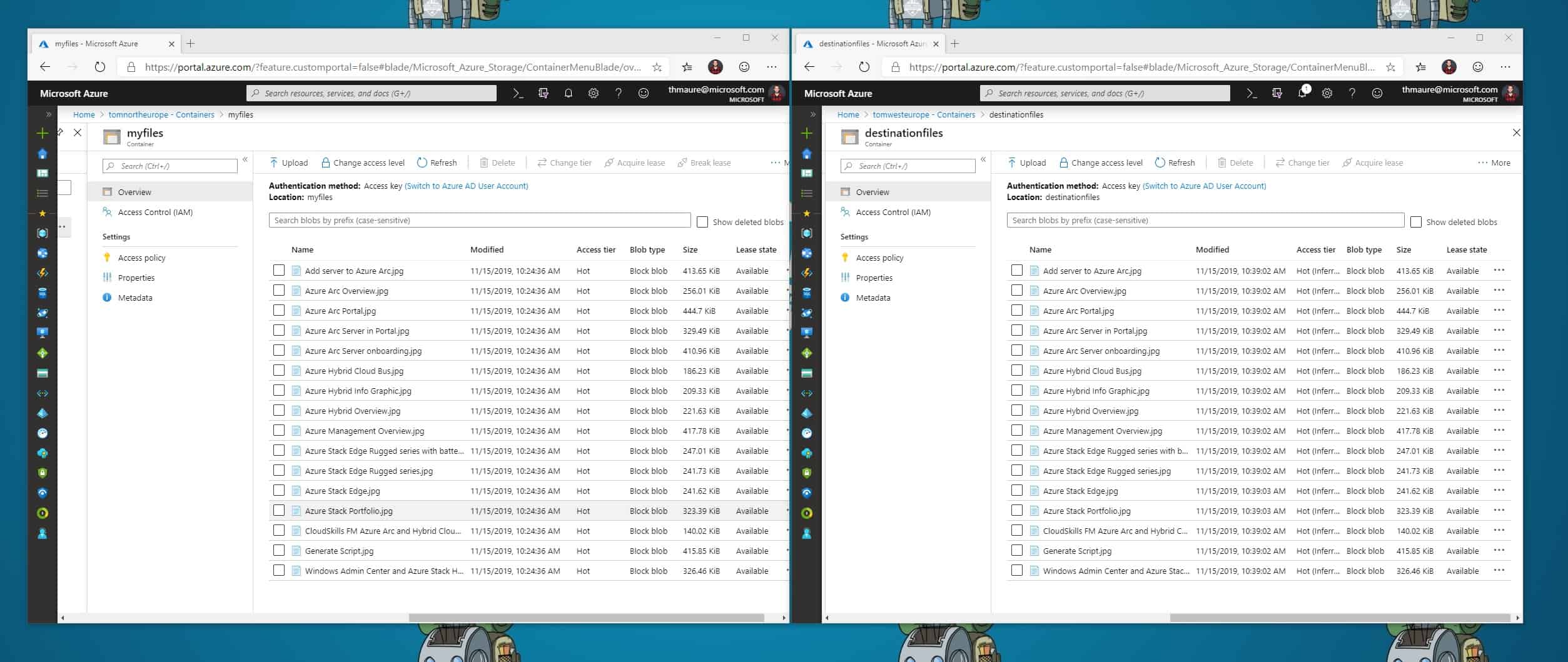

A couple of months ago, I wrote a blog post about how you can sync files to Azure Blob Storage using...

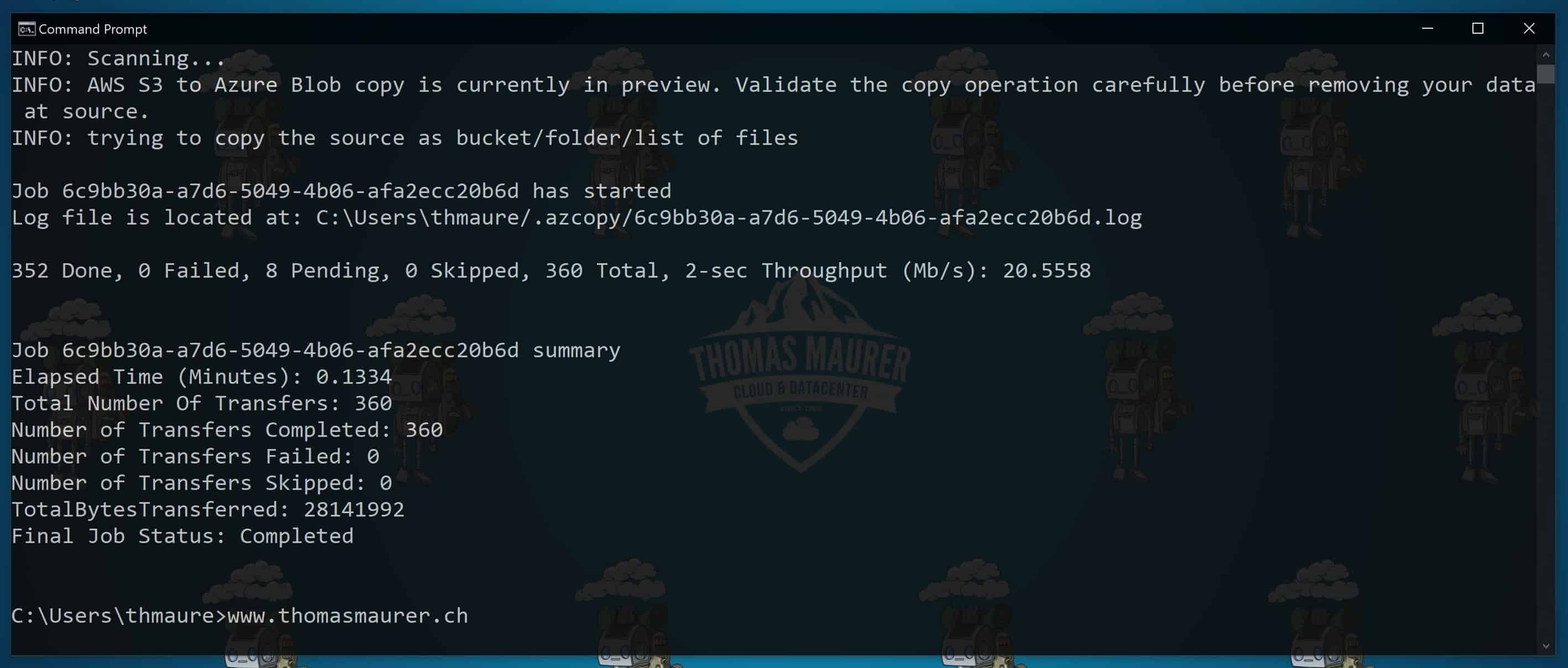

Migrate AWS S3 buckets to Azure blob storage

With the latest version of AzCopy (version 10), you get a new feature that allows you to migrate Amazon S3 buckets to Azure Blob Storage...
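As a rough illustration of the S3 migration, the Python sketch below only assembles an `azcopy copy` command line and does not run it. The bucket, storage account, and container names are hypothetical placeholders, and `<SAS>` stands in for a real shared access signature token. AzCopy v10 reads the AWS credentials from the `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables, so the sketch also reports which of the two are missing.

```python
from __future__ import annotations

import os
import shlex
from typing import Mapping, Optional

# AzCopy v10 picks up the AWS credentials from these environment variables.
AWS_CREDENTIAL_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def missing_aws_credentials(env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return the names of the AWS credential variables that are not set."""
    env = os.environ if env is None else env
    return [name for name in AWS_CREDENTIAL_VARS if not env.get(name)]


def build_s3_copy_command(s3_bucket_url: str, container_url: str) -> list[str]:
    """Build the `azcopy copy` arguments for copying a whole S3 bucket
    into an Azure Blob Storage container."""
    return ["azcopy", "copy", s3_bucket_url, container_url, "--recursive=true"]


if __name__ == "__main__":
    # Hypothetical bucket, storage account, and container names.
    cmd = build_s3_copy_command(
        "https://s3.amazonaws.com/mybucket",
        "https://myaccount.blob.core.windows.net/mycontainer?<SAS>",
    )
    missing = missing_aws_credentials()
    if missing:
        print("Set these variables first:", ", ".join(missing))
    # Print a shell-quoted version of the command instead of running it.
    print(shlex.join(cmd))
```

Once AzCopy is installed, the same list could be passed to `subprocess.run(cmd, check=True)` to start the transfer.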

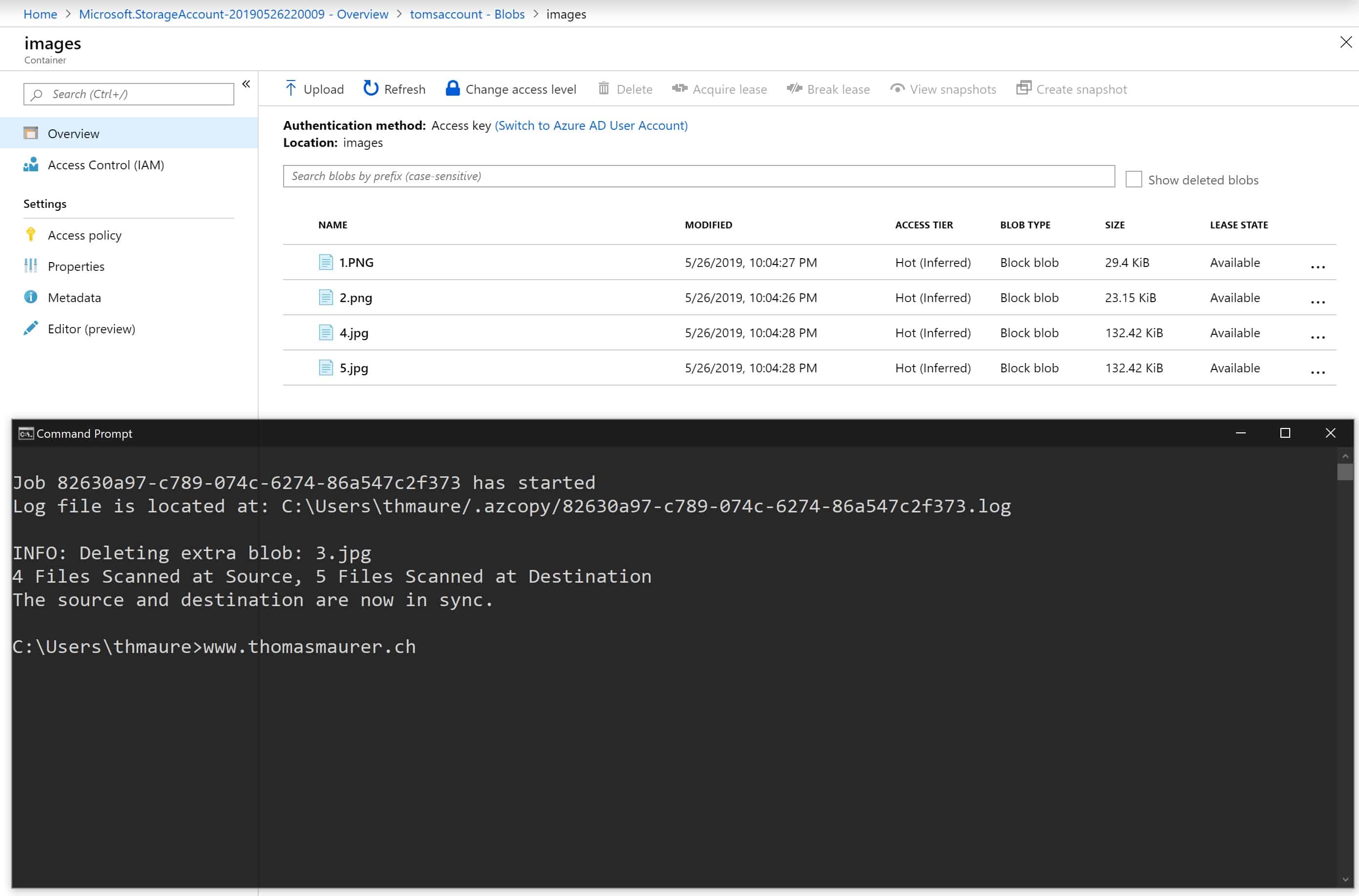

Sync Folder with Azure Blob Storage

With AzCopy v10, the team added a new function to sync folders with Azure Blob Storage. This is great if you have a local folder running on...
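A minimal sketch of the folder sync, assuming AzCopy v10 and a container URL that carries a SAS token: the Python helper below builds the `azcopy sync` arguments for mirroring a local folder into a container. The local path, storage account, and container are hypothetical, and the optional `--delete-destination=true` flag also removes blobs whose source file no longer exists locally.

```python
from __future__ import annotations

import shlex


def build_sync_command(
    local_folder: str,
    container_url: str,
    delete_destination: bool = False,
) -> list[str]:
    """Build the `azcopy sync` arguments that mirror a local folder into a
    Blob Storage container. Only new or changed files are transferred."""
    cmd = ["azcopy", "sync", local_folder, container_url, "--recursive=true"]
    if delete_destination:
        # Also delete blobs whose source file no longer exists locally.
        cmd.append("--delete-destination=true")
    return cmd


if __name__ == "__main__":
    # Hypothetical local path, storage account, and container.
    cmd = build_sync_command(
        "/data/website",
        "https://myaccount.blob.core.windows.net/mycontainer?<SAS>",
        delete_destination=True,
    )
    # Print a shell-quoted version of the command instead of running it.
    print(shlex.join(cmd))
```

Because `azcopy sync` only copies differences, the same command can be rerun, for example from a scheduled task, to keep the container up to date.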

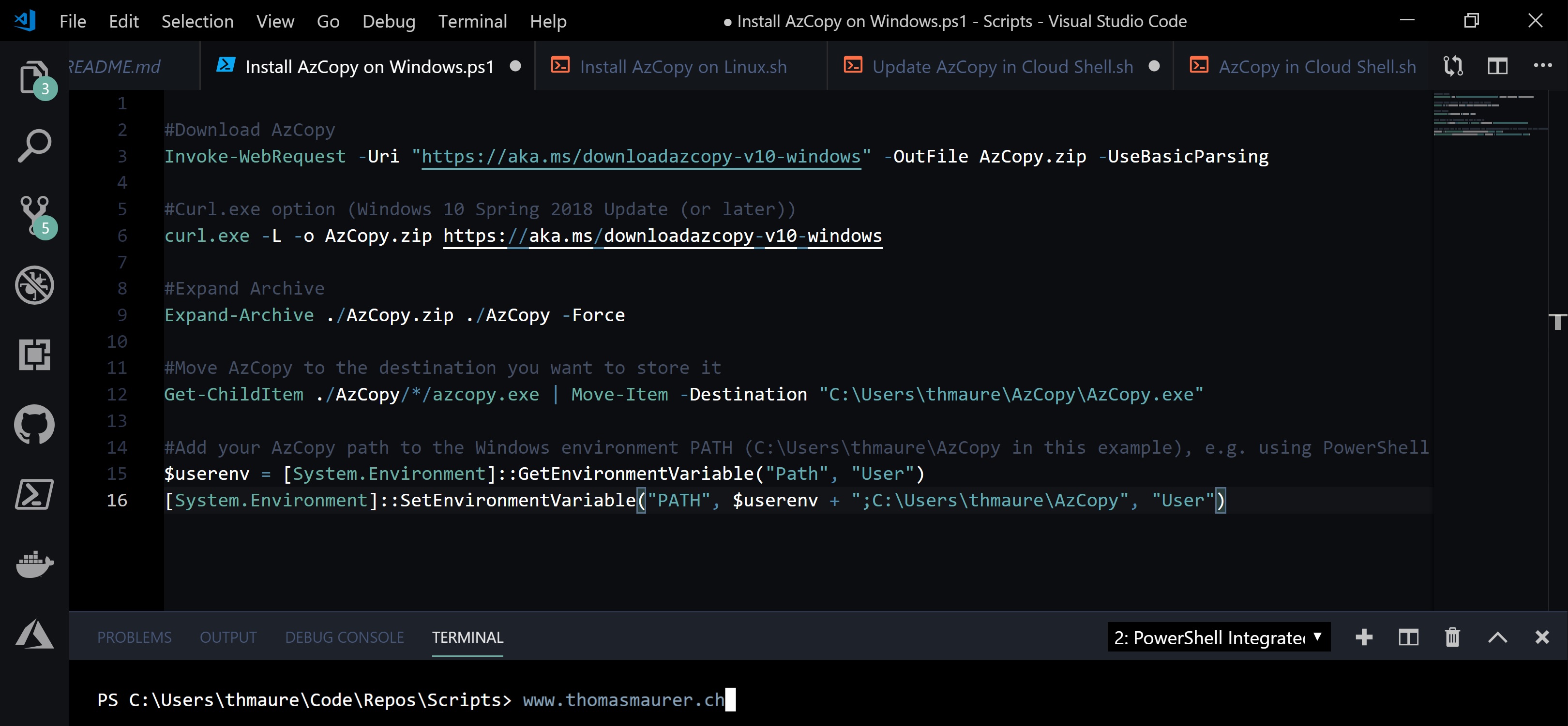

How to Install AzCopy for Azure Storage

AzCopy is a command-line tool to manage and copy blobs or files to or from a storage account. It...
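To make the copy scenario concrete, here is a small Python sketch, assuming AzCopy v10 and authorization through a SAS token appended to the container URL. It checks whether `azcopy` is on the `PATH` with `shutil.which` and assembles an `azcopy copy` command for uploading a local file or folder; the paths, storage account, and container names are hypothetical.

```python
from __future__ import annotations

import shlex
import shutil


def find_azcopy() -> str | None:
    """Return the full path of the azcopy executable, or None if it is not on PATH."""
    return shutil.which("azcopy")


def build_upload_command(source: str, container_url: str, recursive: bool = True) -> list[str]:
    """Build the `azcopy copy` arguments that upload a local file or folder
    to a Blob Storage container."""
    cmd = ["azcopy", "copy", source, container_url]
    if recursive:
        # Needed when the source is a folder, so subfolders are included.
        cmd.append("--recursive=true")
    return cmd


if __name__ == "__main__":
    if find_azcopy() is None:
        print("azcopy was not found on PATH; install it first.")
    # Hypothetical local folder, storage account, and container.
    cmd = build_upload_command(
        "/data/reports",
        "https://myaccount.blob.core.windows.net/mycontainer?<SAS>",
    )
    print(shlex.join(cmd))
```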

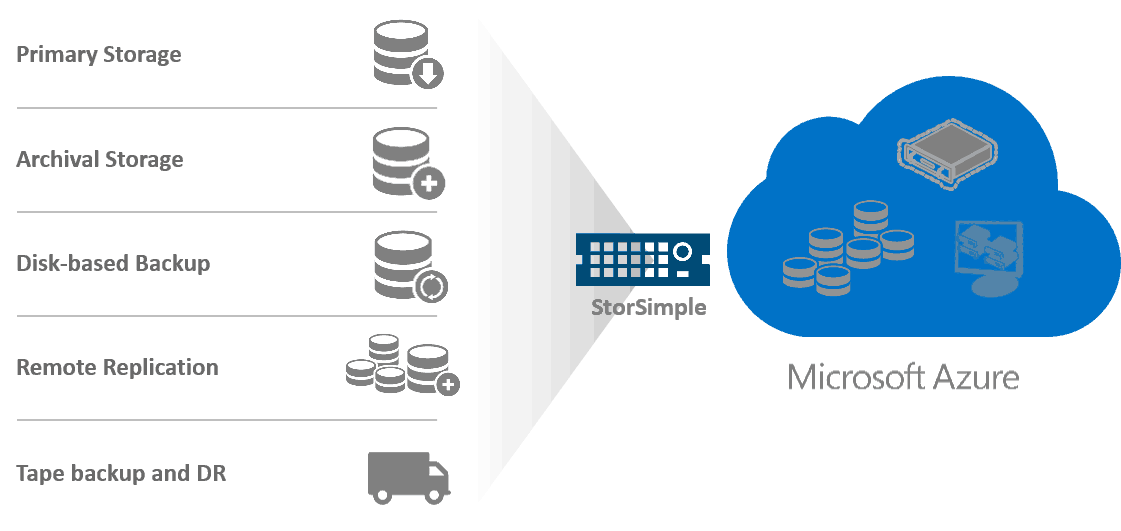

Microsoft Announces Azure StorSimple Hybrid Storage Solutions For The Enterprise

Today Microsoft announced that, starting August 1, it will deliver the new StorSimple 8000 series hybrid storage arrays. The StorSimple...

Hyper-V over SMB: Scale-Out File Server and Storage Spaces

On some community pages, my blog post started discussions about why you should use SMB 3.0 and why you should use Windows Server as a storage...

Cloud as a Tier with Microsoft StorSimple

A few weeks ago, an awesome package arrived at our office in Bern, and I finally have time to write...

The State of Cloud Storage in 2013

Mark Russinovich just tweeted the infographic for The State of Cloud Storage in 2013, which compares Microsoft Windows Azure,...