Today Microsoft announced support for Deduplication in System Center Data Protection Manager (DPM). To use Deduplication together with System Center Data Protection Manager you have to meet the following criteria:

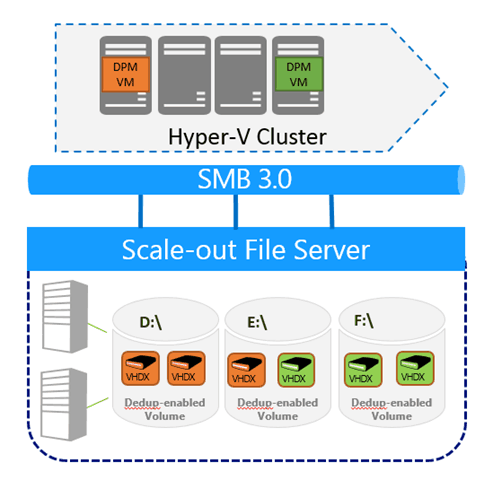

- The Server running System Center Data Protection Manager must be virtualized.

- The DPM server must run Windows Server 2012 R2.

- The Hyper-V server hosting the DPM VM must run Windows Server 2012 R2.

- System Center Data Protection Manager must be version 2012 R2 with Update Rollup 4 (UR4) or higher.

- The Data Storage of the DPM Server must be VHDX Files attached to the DPM VM.

- DPM VHDX files need to be 1 TB in size.

- The VHDX files of the DPM Server must be stored on a Scale-Out File Server Cluster using SMB 3.0

- All the Windows File Server nodes on which DPM virtual hard disks reside and on which deduplication will be enabled must be running Windows Server 2012 R2 with Update Rollup November 2014.

- Deduplication is enabled on the CSV of the Scale-Out File Server

- It is recommended to use Storage Spaces but it is not required

- Use Parity Storage Spaces with enclosure-awareness for resiliency and increased disk utilization.

- Format NTFS with 64 KB allocation units and large file record segments to work better with dedup’s use of sparse files.

- In the hardware configuration above, the recommended volume size is 7.2 TB.

- Plan and set up DPM and deduplication scheduling

- Tune the File Server Cluster for DPM Storage

The combination of deduplication and DPM provides substantial space savings. This allows higher retention rates, more frequent backups, and better TCO for the DPM deployment. The guidance and recommendations in this document should provide you with the tools and knowledge to configure deduplication for DPM storage and see the benefits for yourself in your own deployment.

For more information, check out the following TechNet article: Deduplicating DPM storage

Tags: Backup, Cloud, CloudOS, Data, Data Protection, Data Protection Manager, Deduplication, DPM, Hyper-V, Microsoft, SCDPM, Storage, System Center, System Center 2012 R2, Update Rollup 4, UR4

Last modified: June 26, 2019

Highly anticipated feature, very glad it’s finally available. A couple questions:

– Do you perhaps have any information as to why 1 TB VHDX files are required (or is it just a recommendation)?

– Is storing the VHDX files of the DPM server on a Scale-Out File Server cluster really a requirement? It appears to be more of a recommendation: “Q: It looks as though DPM storage VHDX files must be deployed on remote SMB file shares only. What will happen if I store the backup VHDX files on dedup-enabled volumes on the same system where the DPM virtual machine is running?

A: As discussed above, DPM, Hyper-V and dedup are storage and compute intensive operations. Combining all three of them in a single system can lead to I/O and process intensive operations that could starve Hyper-V and its VMs. If you decide to experiment configuring DPM in a VM with the backup storage volumes on the same machine, you should monitor performance carefully to ensure that there is enough I/O bandwidth and compute capacity to maintain all three operations on the same machine.”

The 1 TB is a recommended maximum size.

The same goes for the SOFS. It’s always recommended to run dedup on volumes on the SOFS, so that compute and dedup stay separate and you avoid trouble on the Hyper-V system itself.

One potential use case for me would be to use a CiB product to house storage for backups, to accommodate the SoFS and compute requirements.

Do we know yet how much reduction to expect, and whether dedup would be effective on VTL applications like Firestreamer?

Thank you Enrico, I actually discussed this with the Senior Program Manager for System Center DPM, and he confirmed it. Basically, no other workload is allowed on the Hyper-V servers, but they’re looking into perhaps expanding this in the future.

Our testing environments are quite different, and I can say that it is possible to go beyond what Microsoft officially states.

To avoid trouble you wouldn’t do anything but the supported way in production environments, but we have heaps of smaller servers with unsupported configurations (mostly less important third-level systems or testing environments), and they run like a charm. :)

Since we have heaps of IO thanks to flash-based storage and RAM, we could see a dramatic impact on dedup and performance boosts within file or clustered services. It’s amazing… ;)

Virtualized backups have been running since we introduced 2012 R2, and there has been no problem at all since then. :)

Performance can be increased a bit by configuring a higher amount of RAM.

https://virtual-ops.de/?p=481

By the way:

Whether to leave the DPM machines running on the same storage depends…

VDI environments usually avoid keeping Pagefile.sys on the same storage the VM is running on.

So usually Pagefile.sys is separated from the VM itself and won’t be deduped.

But if you have a high-IO storage system, you could leave it there.

We do that, but like I said: flash-based systems with high maximum IO.

If you have HDD-based storage, try to separate Pagefile.sys, as the recommendations for VDI environments suggest.

@ craig jones

It depends on your workload, but within typical Windows environments you should easily get a dedup rate of more than 40%. I see typical file servers getting 40 to 60%, and Windows environment backups climbing up to 70 or 80%.
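To put those percentages in perspective, here is a minimal Python sketch of the arithmetic. It assumes that "dedup rate" means the fraction of space saved, and the function name `logical_capacity_tb` is made up for illustration. The 7.2 TB figure is the volume size recommended in the post; the rates are the rough ranges quoted above, not measurements.

```python
def logical_capacity_tb(physical_tb: float, savings_rate: float) -> float:
    """Return how much logical (pre-dedup) data fits on a volume.

    savings_rate is the fraction of space saved by dedup (0.4 means 40%).
    This is an assumed interpretation of "dedup rate".
    """
    if not 0.0 <= savings_rate < 1.0:
        raise ValueError("savings_rate must be in [0, 1)")
    # If a fraction s is saved, each logical TB occupies (1 - s) TB on disk.
    return physical_tb / (1.0 - savings_rate)


if __name__ == "__main__":
    # 7.2 TB volume from the post; rates taken from the comment's ranges.
    for rate in (0.4, 0.6, 0.7, 0.8):
        capacity = logical_capacity_tb(7.2, rate)
        print(f"{rate:.0%} savings: about {capacity:.1f} TB of backup data")
```

The relationship is not linear: going from 70% to 80% savings adds far more logical capacity than going from 40% to 50%, which is why the higher rates on backup workloads matter so much.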

I don’t have experience with Firestreamer, only with QuadstorVTL, which is Linux-based and open source.

The dedup rate is pretty much the same, but you have to play around a bit.

Recommendations for VTLs are usually:

Don’t use compression from the VTL itself or from your preferred backup solution.

But there are considerations.

QuadstorVTL, for example, has a built-in dedup feature as well.

We use DPM 2012/R2 and virtualized backups. So… we don’t use compression or dedup from any product on top.

We only use the Server 2012 R2 dedup feature.

The built-in dedup uses a high amount of RAM, so we would have to set up all machines with a high amount of RAM.

If you stream your VTL data to another location, you could get better results.

I hope you get the picture.

Maybe I should write an article about the different scenarios we tested…?! ;)

How about long-term storage? How does it fit into this design? We have a direct-attached fiber ATL.