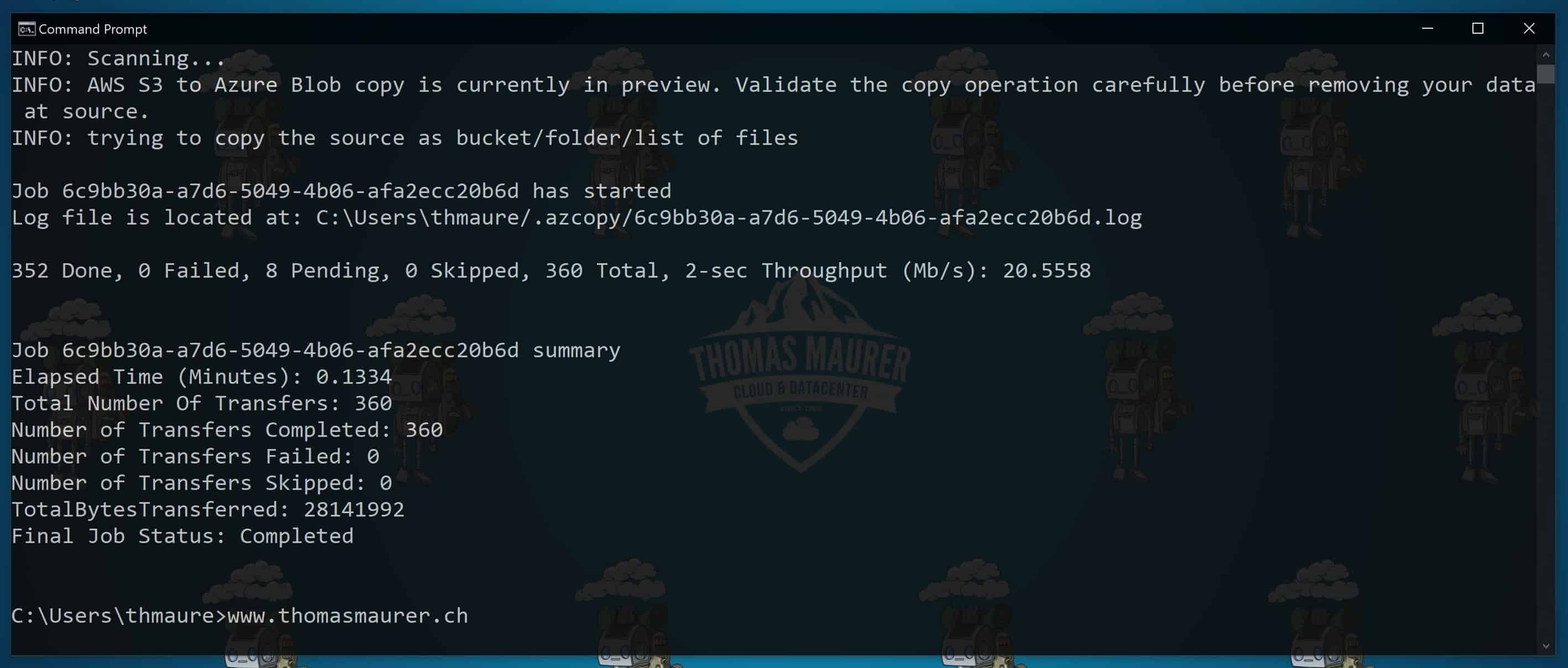

Migrate AWS S3 buckets to Azure Blob Storage

With the latest version of AzCopy (version 10), you get a new feature that allows you to migrate...

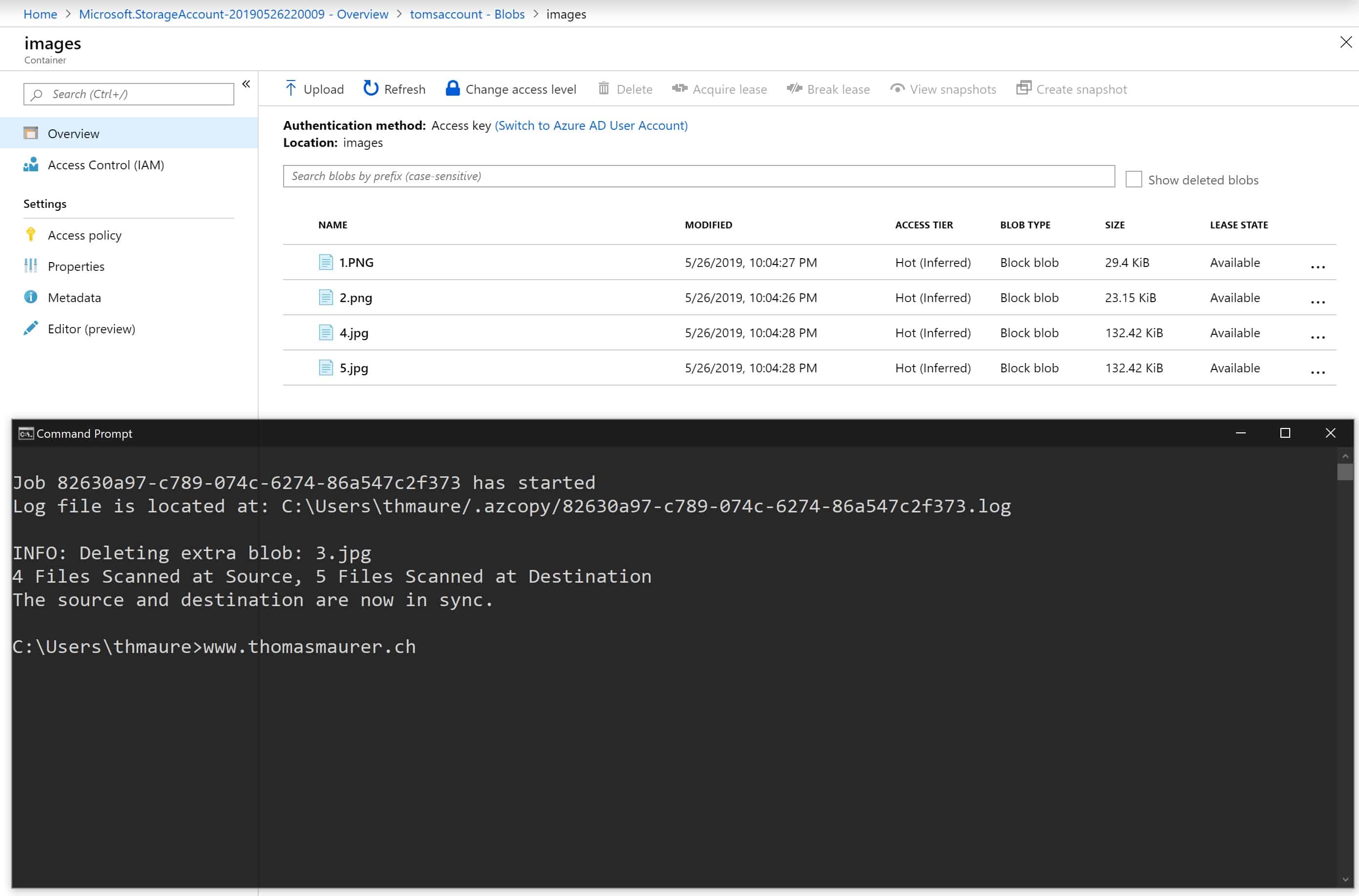

Sync Folder with Azure Blob Storage

With AzCopy v10, the team added a new feature to sync folders with Azure Blob Storage. This is great if you have a local folder running on...

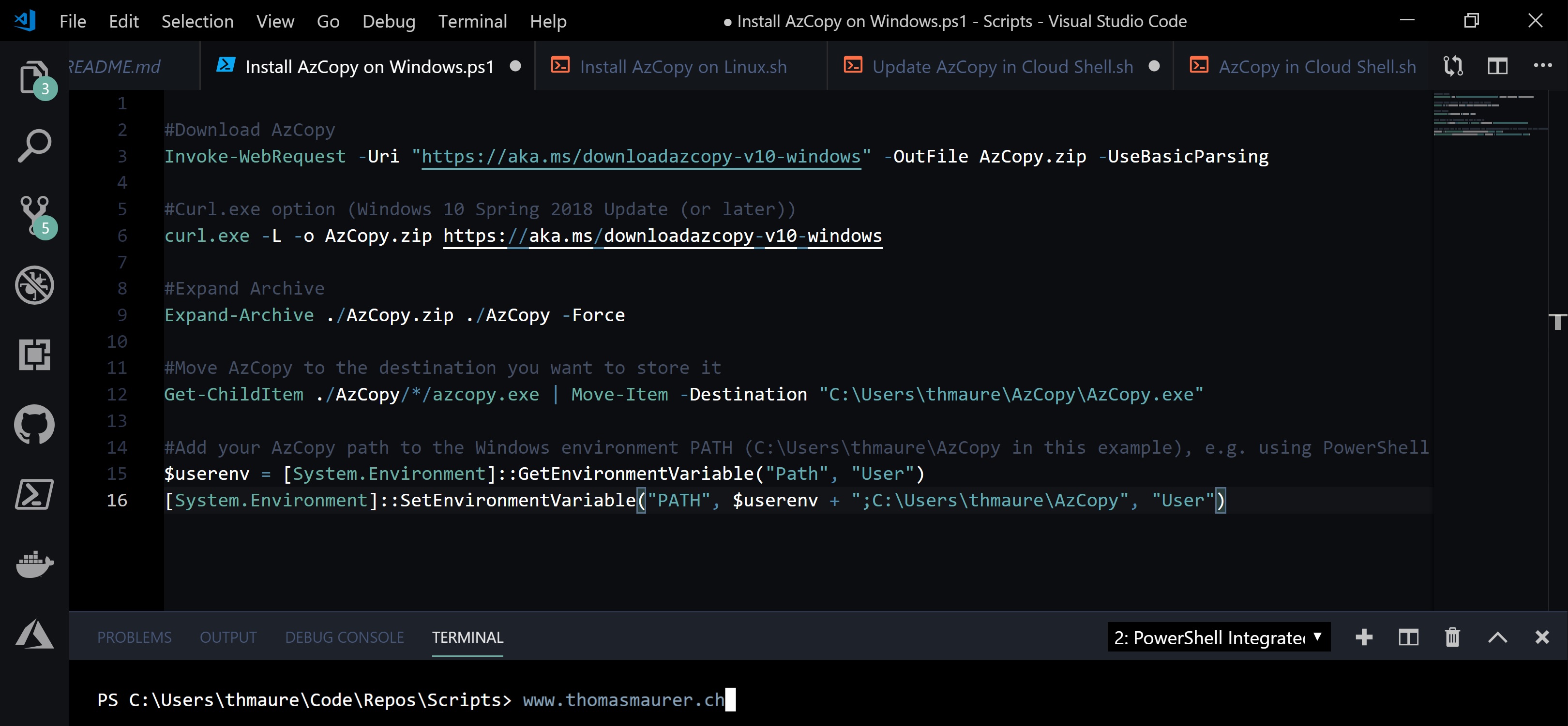

How to Install AzCopy for Azure Storage

AzCopy is a command-line tool to manage and copy blobs or files to or from a storage account. It also allows you to sync storage accounts...

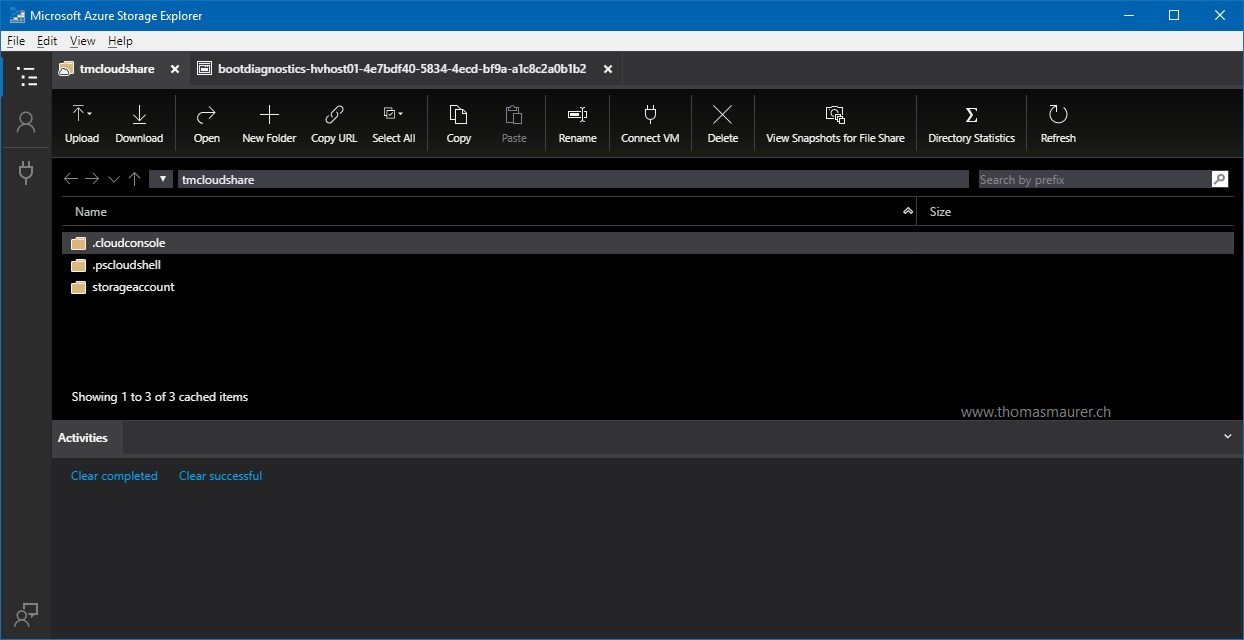

Microsoft quietly released Azure Storage Explorer 1.0.0

Microsoft quietly released Azure Storage Explorer 1.0.0 back in April. There wasn't much noise...